A new 3D-printed prosthetic hand can learn the wearers’ movement patterns to help amputee patients perform daily tasks, reports a study published this week in Science Robotics.

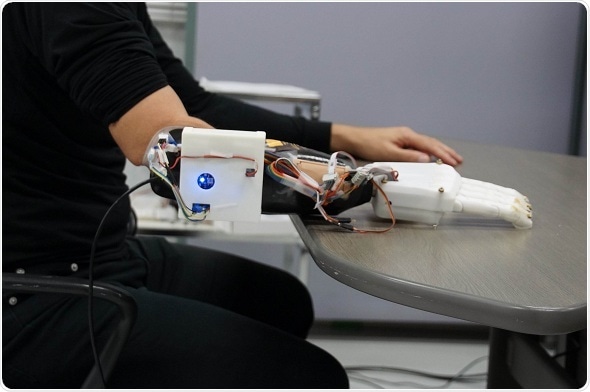

The prosthetic hand and socket. The hand is controlled by the Cybernetic Interface attached to the socket. Credit: Hiroshima University Biological Systems Engineering Lab. License: Image may be reused only with attribution to Hiroshima University

Losing a limb, whether through illness or accident, can present emotional and physical challenges for an amputee and damage their quality of life. Prosthetic limbs can be very useful but are often expensive and difficult to use. The Biological Systems Engineering Lab at Hiroshima University has developed a new 3D-printed prosthetic hand combined with a computer interface. It is the lab’s cheapest and lightest model so far, and it responds more readily to the wearer’s motion intent than earlier versions. Previous generations of their prosthetic hands were made of metal, which is heavy and expensive to manufacture.

Professor Toshio Tsuji of the Graduate School of Engineering, Hiroshima University, describes the mechanism of this new hand and computer interface using a game of “Rock, Paper, Scissors”. The wearer imagines a hand movement, such as making a fist for Rock or a peace sign for Scissors, and the computer attached to the hand combines the previously learned movements of all five fingers to make this motion.

“The patient just thinks about the motion of the hand and then the robot automatically moves. The robot is like a part of his body. You can control the robot as you want. We will combine the human body and machine like one living body.”

Professor Toshio Tsuji, Hiroshima University

Electrodes in the socket of the prosthetic measure electrical signals from nerves through the skin, similar to how an ECG measures heart rate. The signals are sent to the computer, which takes only five milliseconds to decide which movement the hand should make. The computer then sends electrical signals to the motors in the hand.

The neural network, named Cybernetic Interface, which allows the computer to “learn”, was trained to recognize movements from each of the five fingers and then combine them into different patterns, for example to turn Scissors into Rock, pick up a water bottle, or control the force used to shake someone’s hand.

“This is one of the distinctive features of this project. The machine can learn simple basic motions and then combine and then produce complicated motions.”

Professor Toshio Tsuji, Hiroshima University
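
The idea of building complicated motions out of learned single-finger motions can be illustrated with a small toy example. This is only a sketch and not the researchers’ Cybernetic Interface: the finger names, the per-finger scores, the 0.5 threshold and the gesture table are all assumptions made for illustration. It shows that once each finger can be recognized on its own, a whole-hand pattern can be assembled even if that exact combination was never trained as a unit.

```python
# Toy illustration only: names, scores and threshold are hypothetical.
FINGERS = ("thumb", "index", "middle", "ring", "little")

# Hand patterns written as one flag per finger: 1 = curled, 0 = extended.
NAMED_PATTERNS = {
    (1, 1, 1, 1, 1): "rock",
    (0, 0, 0, 0, 0): "paper",
    (1, 0, 0, 1, 1): "scissors",
}


def combine(finger_scores, threshold=0.5):
    """Build a whole-hand pattern from independent per-finger scores.

    finger_scores maps each finger name to a score between 0 and 1 that
    says how strongly that finger is being asked to curl. The result is
    the combined pattern plus its name, or None if the pattern has no name.
    """
    pattern = tuple(int(finger_scores[f] >= threshold) for f in FINGERS)
    return pattern, NAMED_PATTERNS.get(pattern)


# A pattern that matches a named gesture.
print(combine({"thumb": 0.9, "index": 0.1, "middle": 0.2,
               "ring": 0.8, "little": 0.7}))

# A combination with no name: it is still produced finger by finger.
print(combine({"thumb": 0.9, "index": 0.9, "middle": 0.1,
               "ring": 0.1, "little": 0.1}))
```

The second call returns a valid pattern with no gesture name attached, which is the point of the sketch: the combination is assembled from the individual fingers rather than looked up as a whole.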

The Hiroshima University Biological Systems Engineering Lab tested the equipment with patients in the Robot Rehabilitation Center in the Hyogo Institute of Assistive Technology, Kobe. The researchers also collaborated with the company Kinki Gishi to develop the socket to accommodate the amputee patients’ arms.

Seven participants were recruited for this study, including one amputee who had worn a prosthesis for 17 years. Participants were asked to use the hand to perform a variety of tasks that simulated daily life, such as picking up small items or clenching their fist. The accuracy of prosthetic hand movements measured in the study was above 95% for single simple motions and 93% for complicated, unlearned motions.

However, this hand is not quite ready for all wearers. Using the hand for a long time can be burdensome for the wearer, as they must concentrate on the hand position in order to sustain it, which can cause muscle fatigue. The team plans to create a training plan to help wearers make the best use of the hand, and hopes it will become an affordable alternative on the prosthetics market.

Journal reference:

Furui, A. et al. (2019) A myoelectric prosthetic hand with muscle synergy-based motion determination and impedance model-based biomimetic control. Science Robotics. doi.org/10.1126/scirobotics.eaaw6339.