Interpreting chest radiographs correctly requires considerable training and experience. Studies have evaluated the ability of AI models to analyze chest radiographs, leading to the development of AI tools to assist radiologists. Some of these tools have been approved and are commercially available.

Studies evaluating AI as a decision-support tool for human readers have reported improved reader performance, particularly among less experienced readers. Nevertheless, the clinical use of AI tools for radiological diagnosis is still in its early stages. Although AI is increasingly used in radiology, there is a pressing need to evaluate these tools in real-world settings.

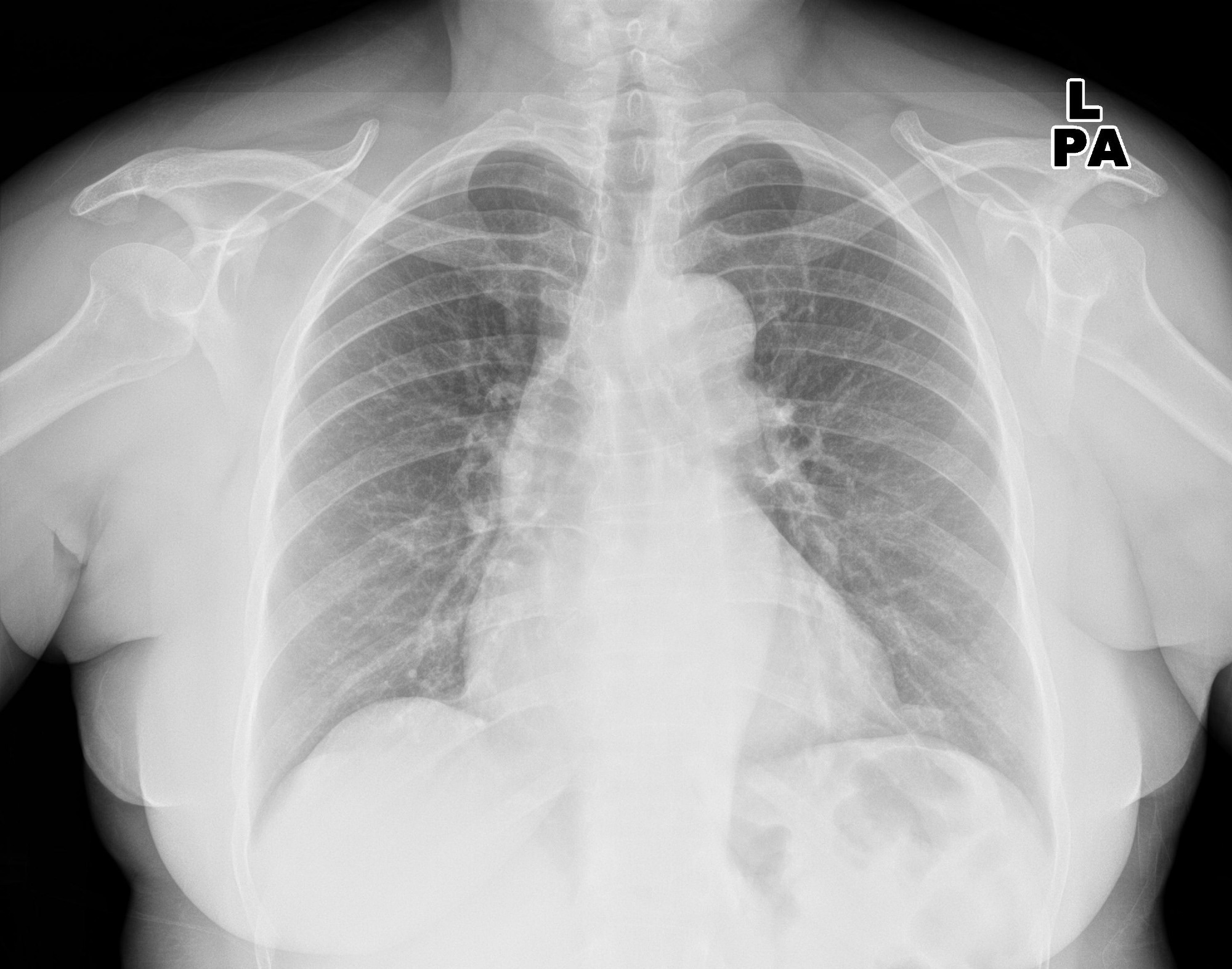

Study: Commercially Available Chest Radiograph AI Tools for Detecting Airspace Disease, Pneumothorax, and Pleural Effusion. Image Credit: KELECHI5050 / Shutterstock


About the study

In the present study, researchers evaluated commercially available AI tools for detecting common acute findings on chest radiographs. They retrospectively identified consecutive unique patients aged 18 years or older who had chest radiographs at four hospitals. Only each patient's first chest radiograph was included. Radiographs were excluded if they 1) were duplicates from the same patient, 2) came from non-participating hospitals, 3) lacked DICOM images, or 4) showed insufficient lung visualization.
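The selection rules above can be sketched as a filter over radiograph records. This is a minimal illustration under stated assumptions, not the study's pipeline: the `Radiograph` fields and the `select_cohort` helper are hypothetical, and the order in which the rules are applied (each patient's first radiograph is chosen before the technical exclusions) is an assumption the article does not settle.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Radiograph:
    # Hypothetical record layout; the study does not describe its data schema.
    patient_id: str
    age: int
    acquired: date
    hospital: str
    has_dicom: bool
    lungs_visible: bool


def select_cohort(records, participating_hospitals):
    """Return each adult patient's first radiograph, minus excluded ones."""
    first_by_patient = {}
    # Walk radiographs in acquisition order so the first one seen per
    # patient is that patient's earliest eligible radiograph.
    for r in sorted(records, key=lambda r: r.acquired):
        if r.age < 18 or r.hospital not in participating_hospitals:
            continue
        # setdefault keeps the earliest radiograph and ignores later
        # duplicates from the same patient.
        first_by_patient.setdefault(r.patient_id, r)
    # Assumed ordering: technical exclusions are applied to the selected
    # first radiograph instead of falling back to a later one.
    return [
        r for r in first_by_patient.values()
        if r.has_dicom and r.lungs_visible
    ]
```

Under this assumed ordering, a patient whose first radiograph lacks DICOM images drops out entirely; the alternative reading (fall back to the next usable radiograph) would move the exclusion checks inside the loop.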

Radiographs were assessed for airspace disease, pleural effusion, and pneumothorax. Experienced thoracic radiologists blinded to the AI predictions established the reference standard, with two readers labeling the chest radiographs independently. Readers had access to patients' medical histories, including prior or subsequent chest radiographs and computed tomography (CT) scans.

A trained physician extracted labels from the radiology reports; reports deemed insufficient for label extraction were excluded from the diagnostic accuracy assessment. Four AI vendors participated in the study: Annalise Enterprise CXR (vendor A), SmartUrgences (vendor B), ChestEye (vendor C), and AI-RAD Companion (vendor D).

Each AI tool processed frontal chest radiographs and generated a probability score for its target finding(s). Manufacturer-specified probability thresholds were used to convert these scores into binary predictions for computing diagnostic accuracy metrics. Three tools used a single threshold, whereas one (vendor B) provided both a sensitivity threshold and a specificity threshold. None of the AI tools had been trained on data from the participating hospitals.
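To make the thresholding step concrete, the sketch below turns probability scores into binary calls and computes sensitivity, specificity, and predictive values against reference labels. It is illustrative only: the scores, labels, and the three thresholds are invented, the helper names are hypothetical, and treating a score equal to the threshold as positive is an assumed convention, not any vendor's documented behavior.

```python
def binarize(scores, threshold):
    # Assumed convention: a score at or above the threshold is a positive call.
    return [s >= threshold for s in scores]


def confusion_counts(predicted, truth):
    """Count true/false positives and negatives against the reference standard."""
    tp = sum(p and t for p, t in zip(predicted, truth))
    fp = sum(p and not t for p, t in zip(predicted, truth))
    fn = sum(t and not p for p, t in zip(predicted, truth))
    tn = sum(not p and not t for p, t in zip(predicted, truth))
    return tp, fp, fn, tn


def ratio(num, den):
    # Return NaN instead of raising when a denominator is empty.
    return num / den if den else float("nan")


def accuracy_metrics(scores, truth, threshold):
    tp, fp, fn, tn = confusion_counts(binarize(scores, threshold), truth)
    return {
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "ppv": ratio(tp, tp + fp),
        "npv": ratio(tn, tn + fn),
    }


# Toy data (not from the study): probability scores and reference labels.
scores = [0.95, 0.70, 0.40, 0.20, 0.60, 0.10]
truth = [True, True, True, False, False, False]

# A hypothetical single threshold, plus a low "sensitivity" threshold and a
# high "specificity" threshold in the spirit of vendor B's two operating points.
for name, t in [("single", 0.5), ("sensitivity", 0.3), ("specificity", 0.8)]:
    print(name, accuracy_metrics(scores, truth, t))
```

On this toy data, lowering the threshold to 0.3 catches every positive case at the cost of a false positive, while raising it to 0.8 eliminates false positives but misses two of the three positive cases. This is the trade-off a paired sensitivity/specificity threshold exposes.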

Findings

The study included 2,040 patients (1,007 male and 1,033 female) with a median age of 72 years. Of these, 67.2% had none of the target findings, while the remaining 32.8% had at least one. Vendors A and C produced no output for eight and two patients, respectively. Most patients had prior or subsequent chest CT scans or radiographs. Almost 60% of patients had two or more findings on chest radiographs, and 31.7% had four or more.

According to the reference standard, airspace disease, pneumothorax, and pleural effusion were present on 393, 78, and 365 chest radiographs, respectively. An intercostal drainage tube was present in 33 patients. The AI tools' sensitivities and specificities ranged from 72% to 91% and from 62% to 86%, respectively, for airspace disease; 62% to 95% and 83% to 97% for pleural effusion; and 63% to 90% and 98% to 100% for pneumothorax.

Negative predictive values remained high (92% to 100%) across findings, whereas positive predictive values were lower and more variable (36% to 86%). For the same target finding, sensitivity, specificity, and negative and positive predictive values differed between AI tools. Seventy-two readers from different radiology subspecialties validated at least one chest radiograph.
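One general reason a tool can combine a high NPV with a lower PPV is prevalence: when a finding is uncommon, negative cases dominate, so even a modest false-positive rate can rival the number of true positives. The sketch below applies Bayes' theorem to hypothetical sensitivity, specificity, and prevalence values (not figures from the study) to illustrate this relationship; `predictive_values` is a hypothetical helper name.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Return (PPV, NPV) from Bayes' theorem for a given prevalence."""
    tp = sensitivity * prevalence              # expected true-positive fraction
    fn = (1 - sensitivity) * prevalence        # expected false-negative fraction
    tn = specificity * (1 - prevalence)        # expected true-negative fraction
    fp = (1 - specificity) * (1 - prevalence)  # expected false-positive fraction
    return tp / (tp + fp), tn / (tn + fn)


# Illustrative inputs only: the same hypothetical tool (90% sensitivity,
# 90% specificity) applied to an uncommon and a common finding.
for prevalence in (0.05, 0.50):
    ppv, npv = predictive_values(0.90, 0.90, prevalence)
    print(f"prevalence={prevalence:.0%}  PPV={ppv:.1%}  NPV={npv:.1%}")
```

With these made-up inputs, the PPV is about 32% and the NPV about 99% at 5% prevalence, while both are 90% at 50% prevalence, even though the tool's sensitivity and specificity are unchanged.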

The false-negative rate for airspace disease did not differ between clinical radiology reports and AI tools, except for vendor B at its sensitivity threshold. However, AI tools had a higher false-positive rate for airspace disease than radiology reports. Likewise, the false-negative rate for pneumothorax did not differ between radiology reports and AI tools, except for vendor B at its specificity threshold.

AI tools had a higher false-positive rate for pneumothorax than radiology reports, except for vendor B at its specificity threshold. For pleural effusion, vendor A had a lower false-negative rate than radiology reports, whereas vendors B and C had higher rates. Three tools had a higher false-positive rate for pleural effusion than radiology reports, and one had a lower rate.

Conclusions

Taken together, the findings suggest that AI tools had moderate to high sensitivity and high negative predictive values for identifying airspace disease, pleural effusion, and pneumothorax on chest radiographs. However, their positive predictive values were lower and more variable, and their false-positive rates were higher than those of radiology reports.

For airspace disease and pleural effusion, the tools' specificity was lower on radiographs with multiple findings than on those with a single finding, and lower on anteroposterior chest radiographs. Notably, many errors made by AI would be difficult or impossible for readers to identify without access to additional imaging or the patient's history.