In this interview, Dr. Bernardo Cordovez describes the current technologies used to analyze nanoparticle activity and the development of a new nanophotonic chip that can be used to characterize nanoparticles.

What is nanoparticle analysis and what biomedical applications does it have?

Nanoparticle analysis is a very important field. Basically, we live in a world that is immersed in nanoparticles. From the paint we use, to the processes that go on within our very own bodies, nanoparticles play a role and because there are so many nanoparticles everywhere, they need to be analyzed for a variety of reasons.

Science Photo | Shutterstock

In industrial processes, for example, these particles need to be analysed in quality control processes to ensure they meet spec. In the more medically orientated fields, nanoparticle analysis is needed to ensure the purity of drug formulations.

Drug manufacturers have found that, aside from the protein drugs they are trying to manufacture, their formulations contain a lot of unwanted outliers and contaminants and these contaminants come in many different shapes and forms.

Proteins can clump and form protein aggregates that can be very immunogenic, basically encouraging the body to attack the drug rather than accepting it. In addition, little pieces of plastic or metal particles can be found in the formulations and these cause a lot of undesired effects.

There's currently no viable tool available that can determine the presence of these nanoparticle proteins or contaminants.

We're basically making tools that serve a unique role in the nanoparticle analysis arena, where they have tremendous impact in their application to the biomedical field. Our technology can be used to determine whether foreign contaminants are present by measuring one nanoparticle at a time.

In what ways are current nanoparticle analysis systems limited?

That's a very important question. The vast majority of nanoparticle analysis technologies work by making bulk or average measurements. Probably the most commonly used method is called dynamic light scattering.

In dynamic light scattering, you basically have a cuvette that contains billions and billions of the particles of interest that you want to study. You then shoot a laser at this cuvette which bounces off of these particles, creating a light scattering signature that can be used to obtain a mean measurement of what the particle size distribution is. Basically, you can probe the entire population in one go and get a single measurement.

However, although it’s a great tool, one of the biggest problems with interrogating every single particle at the same time is that you miss out on a lot of very crucial information. If there are any outliers or any low-level contaminants, those would all be washed out in the same signal and go undetected, which can be disastrous in drug formulations or nanoparticle formulation processes.

Also, with the current technologies the particles are not being measured one by one. You may get a great overall population measurement, but different outliers will be missed, along with potentially different population distributions and so on.
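The point about outliers can be illustrated with a toy calculation. The short Python sketch below is not a model of dynamic light scattering or of any instrument; it uses a plain number average, and the particle sizes, counts, threshold and function names are all hypothetical values chosen for illustration. It simply shows that a single population average barely moves when a handful of large aggregates are present, while a particle-by-particle check finds every one of them.

```python
# Toy illustration only: a plain number average, not a light scattering model.
# All sizes, counts and names below are hypothetical.
MAIN_SIZE_NM = 100.0        # assumed size of the intended particles
AGGREGATE_SIZE_NM = 1000.0  # assumed size of the rare aggregates


def make_population(n_main=100_000, n_aggregates=10):
    """Build a list of particle sizes with a few large outliers mixed in."""
    return [MAIN_SIZE_NM] * n_main + [AGGREGATE_SIZE_NM] * n_aggregates


def bulk_average(sizes_nm):
    """Return one number for the whole population, like an ensemble reading."""
    return sum(sizes_nm) / len(sizes_nm)


def count_outliers(sizes_nm, threshold_nm=500.0):
    """Look at particles one at a time and count those above the threshold."""
    return sum(1 for size in sizes_nm if size > threshold_nm)


population = make_population()
print(f"bulk average: {bulk_average(population):.2f} nm")
print(f"outliers found one by one: {count_outliers(population)}")
```

With these assumed numbers, the average shifts by less than 0.1 nm from the nominal 100 nm, which would be easy to overlook, whereas the one-by-one count reports all ten aggregates.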

What we're basically doing is improving nanoparticle analysis by increasing the resolution and tapping into very important applications, including measuring protein aggregation in very meaningful ways never possible before.

Also, because we can look at single particles at a time, we can actually characterize those individual particles. We can tell whether they're well coated or not and, potentially, gain more information about their very morphology – what they look like, what their shape is.

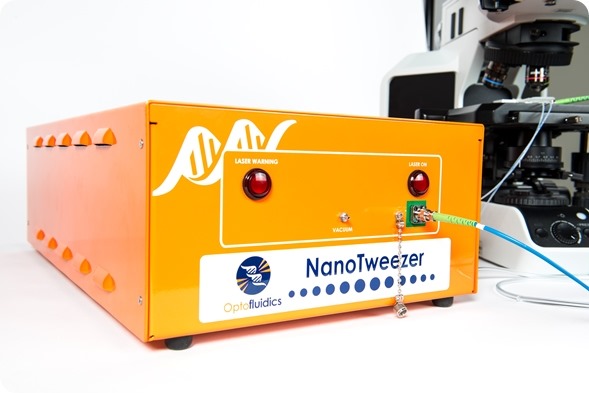

Please can you give a brief introduction to the NanoTweezer and explain how it overcomes some of these limitations?

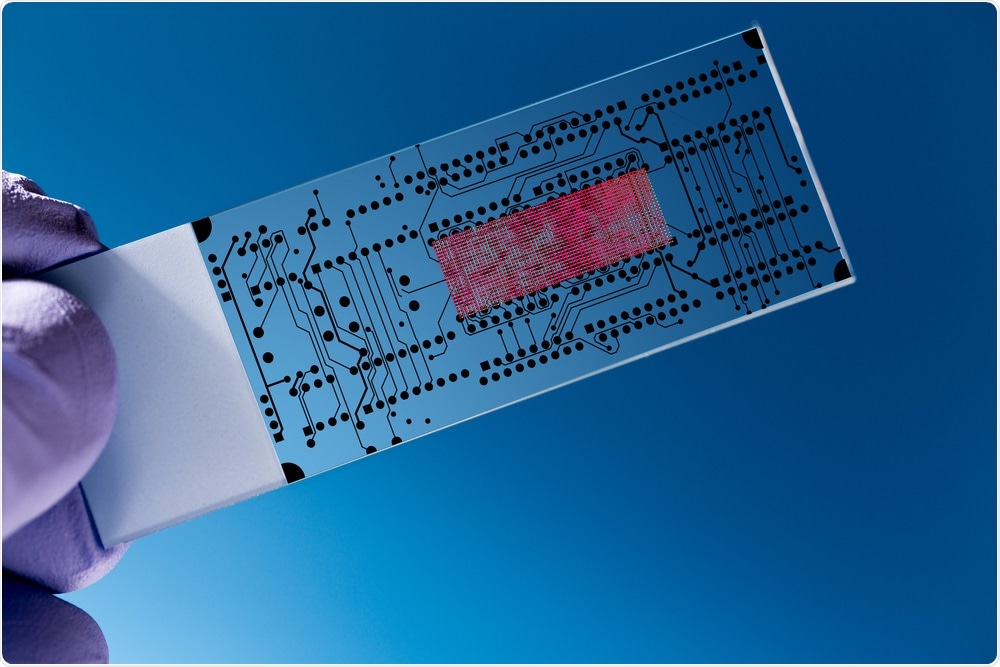

The NanoTweezer is a technology developed at Cornell University and is basically a photonic chip. It is composed of little optical fibers patterned on a chip and, because these fibers are so small, we can tightly confine and shuttle a lot of light through them. The strong optical forces that result can then be used to trap and analyse nanoscale particles.

The optical tweezing technology has existed for several decades. What we do differently is, firstly, we make smaller light spots and confine the light more, meaning we can actually trap smaller particles, one at a time. We can manipulate the particles and bring them to the location where we're going to study them.

The second difference is that, as these particles interact with the optical fibers, they scatter a lot of light, a lot more light than in traditional free-space systems, where a laser beam just shoots at something. We get a lot more light and a lot more signal, which means that as well as being able to look at one particle at a time, we can also irradiate each particle with a lot of light and really increase our signal-to-noise ratio, and therefore measure particles smaller and more complicated than ever possible before.

We can get a lot of information on single particles and then use our software and processing capabilities to transform that into meaningful quantities such as particle shape, particle coating, and of course, size.

What does the NanoTweezer look like and how does it work?

Basically, our system is a microscope bolt-on tool that consists of three components. Our power supply is actually the laser. Then, a fluidic delivery system in the form of a syringe pump allows people to deliver their reagents of interest into the heart of our technology, which is the chip.

These nanophotonic chips have embedded microfluidic elements – very small microfluidic channels that are used to deliver tiny amounts of fluid and reagents to the optical fibers on the chip. That's basically the heart of the technology.

Because these chips are really small and they need to fit on your microscope, we have designed a microscope mount that provides a stage for people to simply place the chip on so they can look at it through the microscope.

So, in summary, there are the chips, which have photonic and microfluidic elements; the instrument, which drives the chip with a laser source; a syringe pump, which delivers the reagent; and the interface, which is the mount.

How was the NanoTweezer developed?

It started in the academic environment, in Professor David Erickson's group at Cornell, and was owned by Cornell. It's a technology that has featured in Nature magazine. David Erickson and I, as his first PhD graduate student, started Optofluidics, although I wasn’t an inventor of the technology.

Our vision was to commercialize this technology to service the nanoparticle manipulation and analysis sectors. At the very onset, we knew we had a phenomenal manipulation technology but, as time went on and we spoke to more and more customers, we realized that most of the value, of course, is in the data. We realized we not only had the world-leading nanomanipulation technology, but also a phenomenal tool in nanoparticle analysis.

When we started the company, we were funded by the U.S. Federal Government through something called the Small Business Innovation Research Awards and through different government agencies, including the National Science Foundation, the Defense Advanced Research Projects Agency and now, the NIH.

We are also venture-backed by a bioventures group called BioAdvance, who are our partners and a great resource.

The NanoTweezer has recently been listed as a finalist for the Prism Awards. What impact do you hope this will have?

I think it will have a phenomenal impact because people are recognizing that we're really making leading tools here. The Prism Awards is an international competition and there are only three finalists in our area – Life Science and Biophotonics – so we're very honored to be here.

We love the fact that we are getting this visibility and we're really trying to capitalize on it. We are particularly excited because, in essence, this technology is optics and this is the world’s leading optics conference. The first conferences that we ever went to were actually SPIE, followed by Photonics West and that's because people in the field of optics could understand our technology before a lot of other people could at the beginning.

In many ways, I think that the visibility that we're going to be getting is going to be quite terrific and we're really hoping to capitalize on it.

Please can you tell us more about your team?

Regarding our team, David Erickson and I are co-founders. The other co-founder is also the CTO and President of the company, Rob Hart. Rob and I basically handle day-to-day activities and he's actually one of my best friends too, so it's all in the family here.

We have a phenomenal team of engineers, we're very tech-centric, and we also have a phenomenal board with a great deal of industry expertise.

What are Optofluidics’ plans for the future?

Our long-term objective is to become the world-leading nanoparticle analysis company. Now that we have had our first couple of systems installed and we have launched, the short-term objective is to get more orders by servicing our clients' needs.

The first thing we need to do is focus on the business, focus on selling and getting instruments out there that are useful to scientists and getting people to use and validate our technology so it can be taken to the next level. We would like to make it a must-have for the pharma industry, for formulation quality control in industrial processes, in the analysis of food pigments, in the beverage industry, in every industry that uses emulsions, and so on.

The instrument that we have right now is a microscope add-on tool. So it's something that saves researchers from having to spend, say, $200,000 on a single tool, because it can be combined with the microscope they already own to manipulate and excite the particles while using their own system at the detection end. As we get the customer feedback that we need, we're going to build very simple-to-use instruments that cater for one or two very specific applications.

What do you think the future holds for nanoparticle analysis?

I think that more and more companies are starting to move away from probing particles a billion at a time and are starting to look at single particle measurements. We're entering a new wave in technology that is very meaningful, where we can look at one or two particles at a time and that's really important. In academia, a lot of the work is now in the study of single molecules.

I think that as time goes on, the regulatory requirements are getting much, much more stringent. In the biomedical field particularly, particle analysis related to drug formulations is going to get much, much bigger. Right now, for example, there are no clear-cut regulations with regard to protein aggregates that are smaller than a certain size. This is mainly because the regulatory agencies are waiting for technologies like our own to make this possible. It is a huge opportunity and we are looking to capitalize on it.

With the evolution of technologies like ours that can tell you whether you have small particle clumps or contaminants, regulations will follow. Then nanoparticle analysis will most definitely grow as a result of these regulations becoming more stringent, because the technology will be available.

Where can readers find more information?

https://halolabs.com/

About Dr. Bernardo Cordovez

Dr. Bernardo Cordovez is co-founder and Chief Executive Officer of Halo Labs. Prior to starting the company, he was a Postdoctoral Associate at Cornell University, where he also obtained his M.S. and Ph.D. degrees.

Dr. Cordovez’s wide range of experience is focused in the areas of microfluidics and nanophotonics and spans applications including biomolecule analysis, trapping and detection, drug delivery, bioenergy production, and data storage. In 2012 he was named one of Philadelphia’s top 30 under 30 entrepreneurs.