Scientists have figured out how to translate brain waves into speech using Artificial Intelligence (AI). The results of this breakthrough were detailed in the latest issue of Scientific Reports.

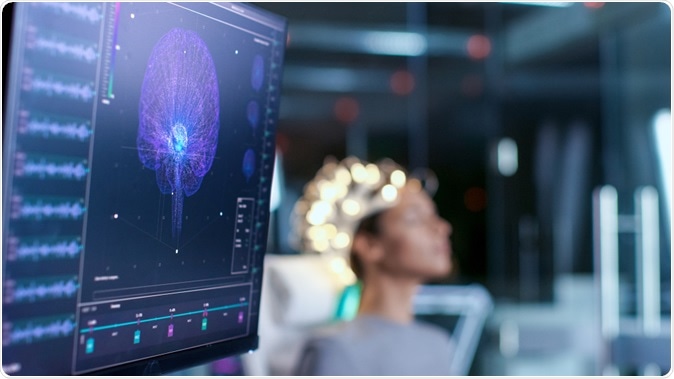

Scientists turn brain waves into speech using AI. Image Credit: Gorodenkoff / Shutterstock

Nima Mesgarani, senior author of the study from the Zuckerman Institute at Columbia University, said, “Our voices help connect us to our friends, family and the world around us, which is why losing the power of one's voice due to injury or disease is so devastating.” Mesgarani explained, “With today's study, we have a potential way to restore that power. We've shown that, with the right technology, these people's thoughts could be decoded and understood by any listener.”

The researchers explain that neurons, the brain's nerve cells, are stimulated when a person hears someone speak or hears a sound, and that this activity generates brain waves, or patterns of brain activity. This understanding has helped in the development of neuroprosthetics, which could connect the brain to a computer and help researchers decode the meaning of the waves.

The team therefore worked on developing a technique that could reconstruct auditory stimuli using a neural network. An autoencoder helped convert audio signals into spectrograms. The system used 80 hours of recorded speech, converting it into signals based on the frequencies of the speech.
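A spectrogram describes how the strength of each frequency in a sound changes over time. The article does not describe how the study's autoencoder produced its spectrograms, so the following is only a minimal sketch of the general idea, assuming NumPy and a synthetic 440 Hz tone in place of recorded speech. The function name, frame length, hop size and sample rate are illustrative choices, not details from the study.

```python
import numpy as np


def spectrogram(signal, frame_len=256, hop=128):
    """Return a magnitude spectrogram of shape (n_frames, frame_len // 2 + 1).

    Illustrative helper, not the study's method: the signal is cut into
    overlapping frames, each frame is tapered with a Hann window, and the
    magnitude of its Fourier transform is taken.
    """
    signal = np.asarray(signal, dtype=float)
    if len(signal) < frame_len:
        raise ValueError("signal is shorter than one frame")
    window = np.hanning(frame_len)
    n_frames = 1 + (len(signal) - frame_len) // hop
    frames = np.stack(
        [signal[i * hop : i * hop + frame_len] * window for i in range(n_frames)]
    )
    # rfft keeps only the non-negative frequencies of a real-valued signal.
    return np.abs(np.fft.rfft(frames, axis=1))


# Synthetic stand-in for speech: one second of a 440 Hz tone (assumed values).
sample_rate = 8000
t = np.arange(sample_rate) / sample_rate
tone = np.sin(2 * np.pi * 440 * t)

spec = spectrogram(tone)

# The frequency bin with the most energy should sit close to 440 Hz.
peak_bin = int(spec.mean(axis=0).argmax())
peak_hz = peak_bin * sample_rate / 256
print(spec.shape, peak_hz)
```

Each row of the result is one short slice of time and each column is one frequency band, which is the kind of frequency-based representation a neural network can be trained to reconstruct.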

As a next step, the researchers placed electrodes on the brains of five participants who were to undergo brain surgery for epilepsy. These patients were being treated by Dr Ashesh Dinesh Mehta at the Northwell Health Physician Partners Neuroscience Institute. Each participant had normal hearing. The researchers recorded the electrical activity of the participants' brains as they listened to short stories for 30 minutes. The recording was paused at random points, and the participant was asked to record the last sentence heard onto a vocoder. The vocoder, a voice coder, could map the pattern of brain waves made by audible speech.

In the next experiment, all participants listened to a string of 40 digits, from zero to nine. Their brain signals were recorded, and the vocoder was used to generate audio signals from them. These signals were then fed back to the autoencoder for analysis, and the system was made to repeat the reconstructed digits.

Mesgarani explained, “We found that people could understand and repeat the sounds about 75 per cent of the time, which is well above and beyond any previous attempts. The sensitive vocoder and powerful neural networks represented the sounds the patients had originally listened to with surprising accuracy.”

The researchers add that, for now, only signals from speech the participants heard were decoded; their thoughts could not be decoded. Further, only digits were reconstructed, not more complicated numbers.

Mesgarani said that if the team could take this forward and successfully decode a thought, such as a person wishing for a glass of water, and translate it into speech, it could be a “game changer”. He said, “It would give anyone who has lost their ability to speak, whether through injury or disease, the renewed chance to connect to the world around them.”