About the study

In the present study, researchers used stimulus reconstruction to examine how the brain processes music. They recorded intracranial electroencephalography (iEEG) data from 2,668 electrocorticography (ECoG) electrodes implanted on the cortical surface of 29 neurosurgical patients while the patients passively listened to a three-minute excerpt of the Pink Floyd song "Another Brick in the Wall, Part 1."

Using passive listening as the method of stimulus presentation prevented confounding the neural processing of music with motor activity and decision-making.

Specifically, they used regression-based decoding models to reconstruct this auditory stimulus (the three-minute song excerpt) from neural activity recorded at 347 of the 2,668 electrodes. The reconstructed song closely resembled the original, albeit with less detail; for example, the words were much less clear.
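The idea behind regression-based stimulus reconstruction can be illustrated with a minimal, dependency-free Python sketch. It assumes a single stimulus feature (think of one frequency band of the song's spectrogram), uses ridge (L2-regularized) linear regression as one common choice of regression model, and runs on synthetic data. The function names, lags, noise levels, and penalty are all hypothetical and do not reproduce the authors' pipeline. Each stimulus sample is predicted as a weighted sum of time-lagged neural activity across electrodes; the weights are learned on one stretch of data and evaluated on held-out data.

```python
import math
import random


def lagged_design(hfa, lags):
    """One row per stimulus time t: [1, hfa[e][t + lag] for each electrode e and lag].
    Positive lags let the decoder use neural activity that follows the sound,
    since cortical responses trail the stimulus."""
    n_rows = len(hfa[0]) - max(lags)
    return [[1.0] + [elec[t + lag] for elec in hfa for lag in lags]
            for t in range(n_rows)]


def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_ridge(x, y, alpha):
    """Ridge weights w = (X^T X + alpha * I)^-1 X^T y, leaving the intercept unpenalized."""
    p = len(x[0])
    xtx = [[sum(row[i] * row[j] for row in x) for j in range(p)] for i in range(p)]
    for i in range(1, p):
        xtx[i][i] += alpha
    xty = [sum(row[i] * target for row, target in zip(x, y)) for i in range(p)]
    return solve(xtx, xty)


def predict(x, w):
    return [sum(v * wi for v, wi in zip(row, w)) for row in x]


def pearson_r(a, b):
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((u - ma) * (v - mb) for u, v in zip(a, b))
    return cov / math.sqrt(sum((u - ma) ** 2 for u in a) * sum((v - mb) ** 2 for v in b))


# Synthetic demo: each hypothetical electrode (a stand-in for high-frequency
# activity) echoes the stimulus after a fixed delay in samples, scaled by a
# gain, plus Gaussian noise.
rng = random.Random(0)
n_t = 600
stimulus = [rng.gauss(0.0, 1.0) for _ in range(n_t)]
hfa = []
for delay, gain in [(1, 1.0), (2, 0.5), (3, -0.8)]:
    hfa.append([(gain * stimulus[t - delay] if t >= delay else 0.0) + rng.gauss(0.0, 0.5)
                for t in range(n_t)])

lags = [0, 1, 2, 3, 4]
x = lagged_design(hfa, lags)
y = stimulus[:len(x)]
split = 400  # first 400 rows train the decoder, the rest test it
w = fit_ridge(x[:split], y[:split], alpha=1.0)
test_r = pearson_r(y[split:], predict(x[split:], w))
print(f"held-out reconstruction r = {test_r:.2f}")
```

Reconstructing a full spectrogram would repeat this fit once per frequency band; a nonlinear decoder would replace the weighted sum with a more flexible model, for example a small neural network.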

Researchers have previously used similar methods to reconstruct speech from brain activity; however, this is the first time such an approach has been used to reconstruct music.

iEEG has high temporal resolution and an excellent signal-to-noise ratio. It also provides direct access to high-frequency activity (HFA), an index of nonoscillatory neural activity that reflects local information processing.

Moreover, in prior speech studies, nonlinear models decoding from the auditory and sensorimotor cortices provided the highest decoding accuracy and a remarkable ability to reconstruct intelligible speech. The team therefore combined iEEG with nonlinear decoding models to uncover the neural dynamics underlying music perception.

The team also quantified the effect of dataset duration and electrode density on reconstruction accuracy.

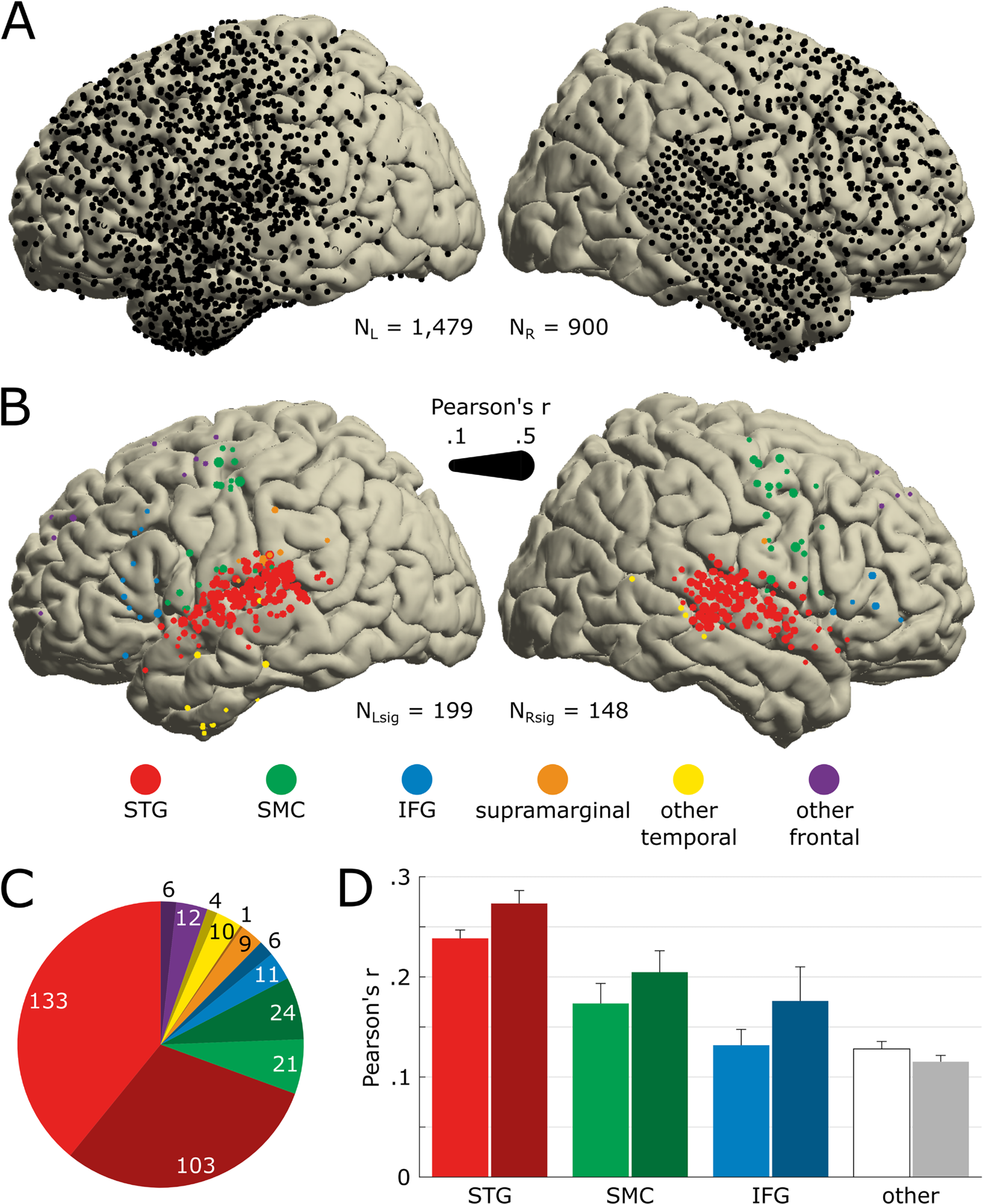

Anatomical location of song-responsive electrodes. (A) Electrode coverage across all 29 patients shown on the MNI template (N = 2,379). All presented electrodes are free of any artifactual or epileptic activity. The left hemisphere is plotted on the left. (B) Location of electrodes significantly encoding the song’s acoustics (Nsig = 347). Significance was determined by the STRF prediction accuracy bootstrapped over 250 resamples of the training, validation, and test sets. Marker color indicates the anatomical label as determined using the FreeSurfer atlas, and marker size indicates the STRF’s prediction accuracy (Pearson’s r between actual and predicted HFA). We use the same color code in the following panels and figures. (C) Number of significant electrodes per anatomical region. Darker hue indicates a right-hemisphere location. (D) Average STRF prediction accuracy per anatomical region. Electrodes previously labeled as supramarginal, other temporal (i.e., other than STG), and other frontal (i.e., other than SMC or IFG) are pooled together, labeled as other and represented in white/gray. Error bars indicate SEM. The data underlying this figure can be obtained at https://doi.org/10.5281/zenodo.7876019. HFA, high-frequency activity; IFG, inferior frontal gyrus; MNI, Montreal Neurological Institute; SEM, standard error of the mean; SMC, sensorimotor cortex; STG, superior temporal gyrus; STRF, spectrotemporal receptive field. https://doi.org/10.1371/journal.pbio.3002176.g002
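The prediction-accuracy measure in the caption (Pearson's r between actual and predicted HFA) and the idea of bootstrapping it can be sketched in Python. This is a simplified, hypothetical stand-in: the study refit each STRF on 250 resamples of the training, validation, and test sets, whereas this sketch keeps one set of predictions fixed and resamples contiguous blocks of time points. The block length, the significance rule, and all data below are assumptions for illustration only.

```python
import math
import random


def pearson_r(a, b):
    """Prediction accuracy as Pearson's r between actual and predicted HFA."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((u - ma) * (v - mb) for u, v in zip(a, b))
    return cov / math.sqrt(sum((u - ma) ** 2 for u in a) * sum((v - mb) ** 2 for v in b))


def block_bootstrap_r(actual, predicted, n_resamples=250, block=10, seed=0):
    """Distribution of r over resamples built from contiguous blocks of samples.

    Blocks, rather than single samples, are drawn with replacement so that the
    temporal autocorrelation of neural signals is roughly preserved.
    """
    rng = random.Random(seed)
    n_blocks = len(actual) // block
    rs = []
    for _ in range(n_resamples):
        idx = []
        for _ in range(n_blocks):
            start = rng.randrange(n_blocks) * block
            idx.extend(range(start, start + block))
        rs.append(pearson_r([actual[i] for i in idx], [predicted[i] for i in idx]))
    return rs


def is_significant(rs, alpha=0.05):
    """Hypothetical criterion: the alpha-quantile of the bootstrap r values is above 0."""
    ordered = sorted(rs)
    return ordered[int(alpha * len(ordered))] > 0.0


rng = random.Random(1)
actual = [rng.gauss(0.0, 1.0) for _ in range(500)]
good_prediction = [v + rng.gauss(0.0, 0.5) for v in actual]  # tracks the signal
flipped_prediction = [-v for v in actual]                    # perfectly anti-correlated
print(is_significant(block_bootstrap_r(actual, good_prediction)))     # True
print(is_significant(block_bootstrap_r(actual, flipped_prediction)))  # False
```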

Results

The results showed that both brain hemispheres were involved in music processing, with the superior temporal gyrus (STG) in the right hemisphere playing a more prominent role in music perception. In addition, although both the temporal and frontal lobes were active during music perception, the researchers identified a new STG subregion tuned to musical rhythm.

Of the 2,668 ECoG electrodes, 347 significantly encoded the song's acoustics. Both hemispheres were involved in music processing, but a larger share of right-hemisphere electrodes than left-hemisphere electrodes responded to the music (16.4% vs. 13.5%). This right-hemisphere bias contrasts with speech, which evokes stronger responses in the left hemisphere.

In both hemispheres, however, most music-responsive electrodes were located over the STG, suggesting that this region plays a crucial role in music perception. The STG is located just above and behind the ear.

Furthermore, nonlinear models provided the highest decoding accuracy, with an r-squared of 42.9%. However, beyond a certain number of electrodes, each additional electrode added less to decoding accuracy. In addition, removing the 43 right-hemisphere rhythmic electrodes reduced decoding accuracy.
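If the reported r-squared is the square of Pearson's correlation between the original and reconstructed song (an assumption; the text does not define it), the corresponding correlation is the positive square root:

$$
r = \sqrt{r^2} = \sqrt{0.429} \approx 0.655
$$

Under that assumption, the nonlinear reconstruction accounts for roughly 43% of the variance in the original.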

The functional and anatomical features of the electrodes included in the decoding model also influenced its decoding accuracy.

Finally, regarding the effect of dataset duration on decoding accuracy, the authors noted that the model attained 80% of the maximum observed decoding accuracy with 37 seconds of data. This finding supports the use of predictive modeling approaches, such as the one in this study, with small datasets.
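How a figure such as "80% of the maximum observed decoding accuracy" can be read off a learning curve is sketched below, assuming decoding accuracy has already been measured at several training durations. The durations and the exponential curve are invented for illustration and are not the study's data; only the 80% threshold comes from the text.

```python
import math


def duration_to_fraction(durations, accuracies, fraction=0.8):
    """Shortest tested training duration whose decoding accuracy reaches
    `fraction` of the maximum accuracy observed across all durations."""
    target = fraction * max(accuracies)
    return next(d for d, acc in sorted(zip(durations, accuracies)) if acc >= target)


# Illustrative saturating curve (NOT the study's data): accuracy rises
# quickly with more training data, then plateaus.
durations_s = [5, 10, 20, 40, 80, 160]
accuracies = [0.4 * (1.0 - math.exp(-d / 20.0)) for d in durations_s]
print(duration_to_fraction(durations_s, accuracies))  # 40
```

With only a few tested durations the answer is coarse; interpolating between measured points would give a finer estimate.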

The findings could have implications for brain-computer interface (BCI) applications, such as communication tools for people whose speech is impaired by a disability. Because BCI technology is relatively new, current BCI-based interfaces generate speech with an unnatural, robotic quality, which might improve with the incorporation of musical elements. The findings could also be clinically relevant for patients with auditory processing disorders.

Conclusion

Our results confirm and extend past findings on music perception, including the reliance of music perception on a bilateral network with a right lateralization. Within the spatial distribution of musical information, redundant and unique components were distributed across the STG, sensorimotor cortex (SMC), and inferior frontal gyrus (IFG) in the left hemisphere, but concentrated in the STG in the right hemisphere.

Future research could extend electrode coverage to other cortical regions, vary the features used by the nonlinear decoding models, and even add a behavioral dimension.