In a recent study posted to the arXiv preprint* server, a large team of Google engineers and researchers presented the Personal Health Insights Agent (PHIA), a large language model (LLM) agent system that uses information retrieval tools and advanced code generation methods to analyze behavioral health data from wearable health trackers and draw inferences from it.

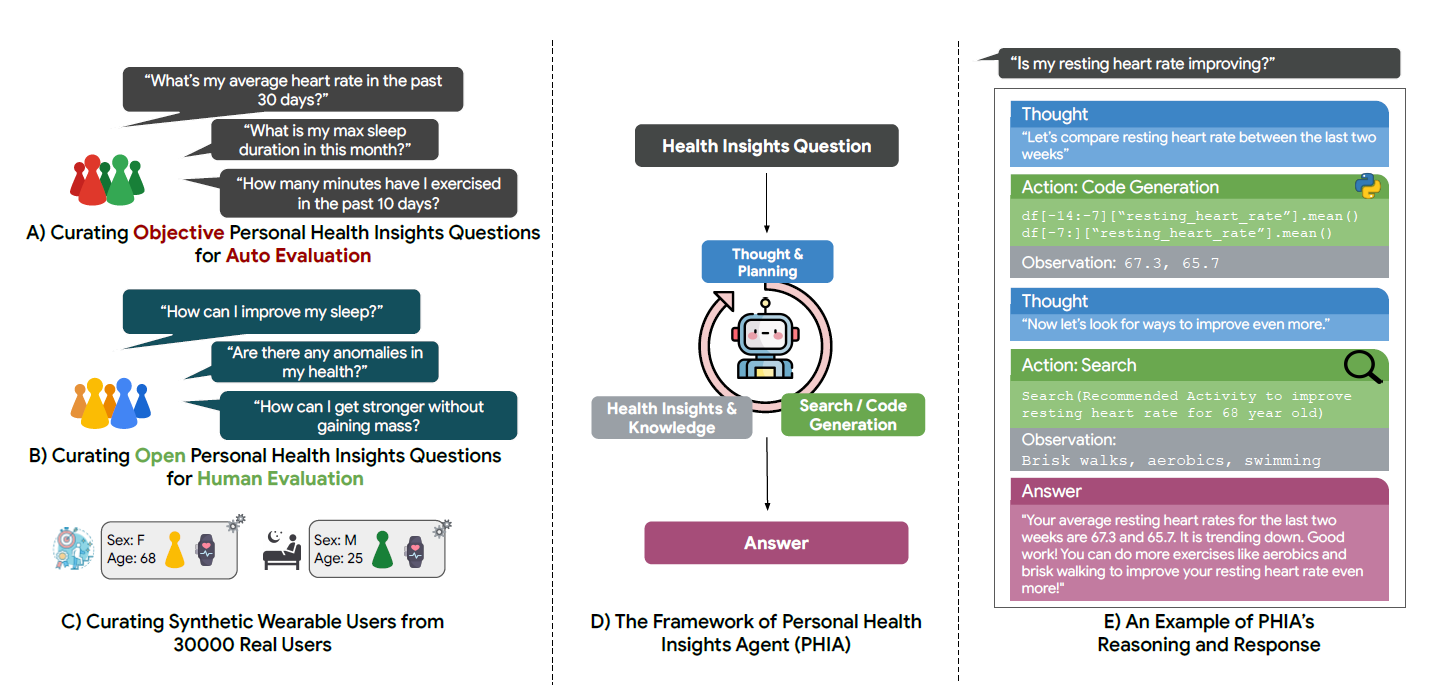

An overview of the Personal Health Insights Agent (PHIA). (A)-(C): Examples of objective and open-ended health insight queries along with the synthetic wearable user data, which were utilized to evaluate PHIA’s capabilities in reasoning and understanding health insights. (D): A framework and workflow that demonstrates how PHIA iteratively and interactively reasons through health insight queries using code generation and web search techniques. (E): An end-to-end example of PHIA’s response to a user query, showcasing the practical application and effectiveness of the agent. Source: Transforming Wearable Data into Health Insights using Large Language Model Agents

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as conclusive, guide clinical practice/health-related behavior, or treated as established information.

Background

Advances in wearable health tracking technology have made it possible to gather longitudinal, continuous, and multi-dimensional data on behavior and physiology outside the clinical setting. Studies monitoring sleep patterns and physical activity levels have further highlighted the importance of wearable-derived data for gaining personalized health insights and using them to promote positive behaviors that reduce the risk of disease.

However, despite the abundance of wearable data, users have had limited ability to gain personalized insights that can be converted into suitable wellness regimens. The data are gathered without clinical supervision, and users cannot readily seek help from experts to interpret them.

Recent studies on machine learning models have shown that LLMs perform accurately and efficiently in tasks such as medical education, question-answering, mental health interventions, and the analysis of electronic health records. Combining these LLMs with other software tools makes it possible to develop LLM-based agents that interact dynamically with the world and derive insights from personal health data collected by wearables.

About the study

In the present study, the researchers described the Personal Health Insights Agent (PHIA), which they present as the first LLM-based agent for interpreting and deriving insights from personal health data obtained from wearable health trackers.

PHIA uses the ReAct agent framework, in which the agent autonomously carries out actions, such as calling a tool, and incorporates its observations of the results into its subsequent decision-making. By combining this framework with advanced code generation methods and integrated web search, PHIA is designed to help answer numerous real-world questions about health.
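To make the act-and-observe cycle concrete, here is a minimal Python sketch of a ReAct-style loop. It is an illustration under stated assumptions, not PHIA's implementation: the `scripted_policy` function stands in for the LLM, the `run_code` and `web_search` tools are toy placeholders, and the sleep values are invented for the example.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable

# Hypothetical synthetic wearable data: hours slept on seven nights.
SLEEP_HOURS = [6.5, 7.0, 8.0, 5.5, 7.5, 6.0, 8.5]


@dataclass
class Step:
    thought: str       # the agent's reasoning for this step
    action: str        # name of the tool to call, or "finish"
    action_input: str  # argument for the tool, or the final answer


def run_code(expression: str) -> str:
    """Toy stand-in for a code-execution tool: evaluates one expression."""
    allowed = {"mean": mean, "min": min, "max": max, "round": round,
               "sleep_hours": SLEEP_HOURS}
    # eval is tolerable here only because the input is scripted, not model-generated.
    return str(eval(expression, {"__builtins__": {}}, allowed))


def web_search(query: str) -> str:
    """Toy stand-in for a search tool: returns a canned placeholder."""
    return "Placeholder search result for: " + query


TOOLS: dict[str, Callable[[str], str]] = {
    "run_code": run_code,
    "web_search": web_search,
}


def scripted_policy(question: str, observations: list[str]) -> Step:
    """Stands in for the LLM: chooses the next step from the observations so far."""
    if len(observations) == 0:
        return Step("I need the average from the data.",
                    "run_code", "round(mean(sleep_hours), 2)")
    if len(observations) == 1:
        return Step("I should look up context for this number.",
                    "web_search", "typical adult sleep duration")
    return Step("I have enough to answer.", "finish",
                f"Your average sleep was {observations[0]} hours per night.")


def react_loop(question: str,
               policy: Callable[[str, list[str]], Step],
               max_steps: int = 5) -> str:
    """Alternate between choosing an action and observing its result."""
    observations: list[str] = []
    for _ in range(max_steps):
        step = policy(question, observations)
        if step.action == "finish":
            return step.action_input
        # Each tool result is fed back into the next decision.
        observations.append(TOOLS[step.action](step.action_input))
    return "No answer within the step limit."


if __name__ == "__main__":
    print(react_loop("How much do I sleep on average?", scripted_policy))
```

In a real agent, the policy would be a model call that receives the question and the accumulated observations, and the step limit guards against loops that never reach a final answer.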

The researchers also conducted a time-intensive human evaluation, in which 19 annotators assessed more than 6,000 model responses, and an automatic evaluation of twice as many model responses. Together, these evaluations indicated that the LLM-based agent reasoned better over longitudinal behavioral health data than the alternatives tested. The researchers also reported that PHIA could provide deep insights when interpreting health data, and they compared its performance with that of text-only numerical reasoning tools and LLM-based non-agent tools.

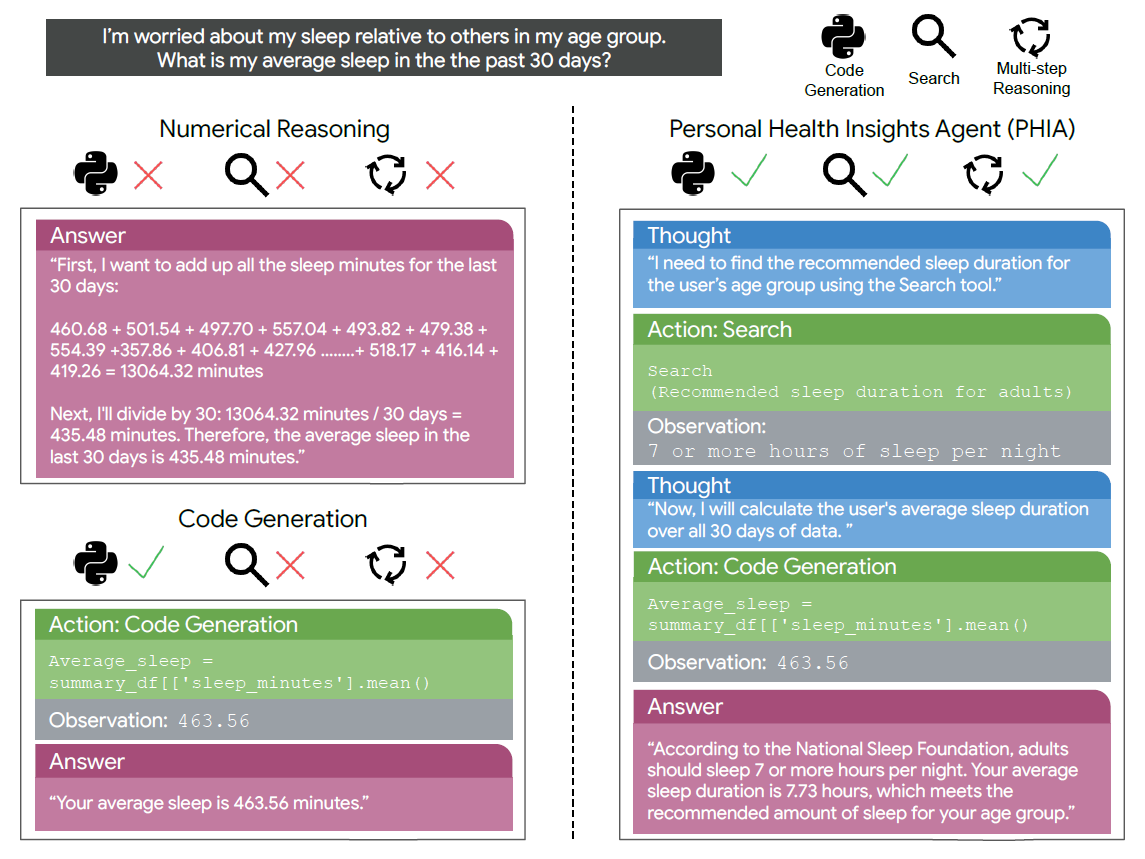

Baseline Comparison. Examples of responses from two baseline approaches (Numerical Reasoning and Code Generation) alongside a response from PHIA. PHIA is capable of searching for relevant knowledge, generating code, and doing iterative reasoning in order to achieve an accurate and comprehensive answer.

Two language model baselines, code generation and numerical reasoning, were used to compare and evaluate PHIA's performance. To evaluate PHIA's capacity for open-ended reasoning, the study included 12 independent human annotators experienced in analyzing wearable data on fitness and sleep patterns. The annotators evaluated the quality of the reasoning PHIA provided in response to open-ended queries.

They were also tasked with determining whether the model responses utilized relevant data, interpreted the question accurately, incorporated domain knowledge, used correct logic, excluded harmful content, and provided clear communication on personalized insights.
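The six criteria above could be recorded and aggregated along the following lines. This is a hypothetical sketch: the field names are invented, each criterion is simplified to a yes/no judgment, and the sample annotations are made up for illustration. The study's actual rating scale and tooling are not described here.

```python
from dataclasses import dataclass, fields


@dataclass
class Annotation:
    """One annotator's judgment of one model response (binary simplification)."""
    uses_relevant_data: bool
    interprets_question: bool
    uses_domain_knowledge: bool
    logic_correct: bool
    no_harmful_content: bool
    clear_communication: bool


def pass_rates(annotations: list[Annotation]) -> dict[str, float]:
    """Return the fraction of annotations that pass each criterion."""
    if not annotations:
        raise ValueError("at least one annotation is required")
    total = len(annotations)
    return {
        f.name: sum(getattr(a, f.name) for a in annotations) / total
        for f in fields(Annotation)
    }


if __name__ == "__main__":
    # Made-up annotations for illustration only.
    sample = [
        Annotation(True, True, True, True, True, True),
        Annotation(True, True, False, True, True, True),
        Annotation(True, False, False, True, True, False),
        Annotation(False, True, True, False, True, True),
    ]
    for criterion, rate in pass_rates(sample).items():
        print(f"{criterion}: {rate:.2f}")
```

Reporting a rate per criterion, rather than a single overall score, keeps it visible which aspect of a response (for example, domain knowledge versus logic) accounts for a difference between systems.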

Results

The findings showed that PHIA could iteratively and interactively use reasoning and planning tools to analyze and interpret personal health data. On objective personal health queries, PHIA outperformed the code generation and numerical reasoning baselines by 14% and 290%, respectively.

Additionally, for open-ended, complex queries, the expert human annotators reported that PHIA performed significantly better than the baselines in health insight reasoning and interactive analysis of health data. Because PHIA operates fully automatically, without supervision, it can analyze personal health data from wearables using only advanced planning, web search, and iterative reasoning.

The human and automatic evaluations also showed that PHIA answered more than 84% of the factual numerical queries and over 83% of the crowd-sourced open-ended questions accurately. According to the study, this LLM-based agent could potentially help individuals interpret personal health data from their wearables and use these insights to develop personalized health regimens.

Conclusions

To summarize, the study showed that the LLM-based agent PHIA performed better than established baselines in using tools and iterative reasoning to analyze personal health data from wearables and provide accurate responses to factual numerical queries and open-ended questions. With the integration of advanced LLMs and knowledge from medical domains, the researchers believe that the applications of LLM-based agents in personal health can grow substantially.

Journal reference:

- Preliminary scientific report.

Transforming Wearable Data into Health Insights using Large Language Model Agents. Mike A. Merrill, Akshay Paruchuri, Naghmeh Rezaei, Geza Kovacs, Javier Perez, Yun Liu, Erik Schenck, Nova Hammerquist, Jake Sunshine, Shyam Tailor, Kumar Ayush, Hao-Wei Su, Qian He, Cory Y. McLean, Mark Malhotra, Shwetak Patel, Jiening Zhan, Tim Althoff, Daniel McDuff, and Xin Liu. arXiv:2406.06464, DOI: 10.48550/arXiv.2406.06464, https://arxiv.org/abs/2406.06464