Their findings indicate that the Convolutional Long Short-Term Memory (ConvLSTM) model they developed detects facial expressions associated with health risk with 99.89% accuracy on a synthetic dataset, which could support earlier detection and better patient outcomes in hospital settings.

Study: AI-Based Visual Early Warning System

Background

Facial expressions are crucial to human communication, conveying emotions and non-verbal cues across different cultures. Charles Darwin first explored the idea that facial movements reveal emotions, and later research by Ekman and others identified universal facial expressions linked to specific emotions.

The Facial Action Coding System (FACS), developed by Ekman and Friesen, became a vital tool in studying these expressions by analyzing the muscle movements involved. Over time, the study of facial expression recognition (FER) has expanded into areas like psychology, computer vision, and healthcare.

Various models and databases have been created to improve the automatic detection of facial expressions, particularly in clinical settings. Recent advancements include the use of Convolutional Neural Networks (CNNs) and other machine learning techniques to recognize facial expressions and predict health conditions.

These developments are especially valuable in healthcare, where accurate recognition of emotions like pain, sadness, and fear can help in the early detection of patient deterioration, improving care and outcomes.

About the study

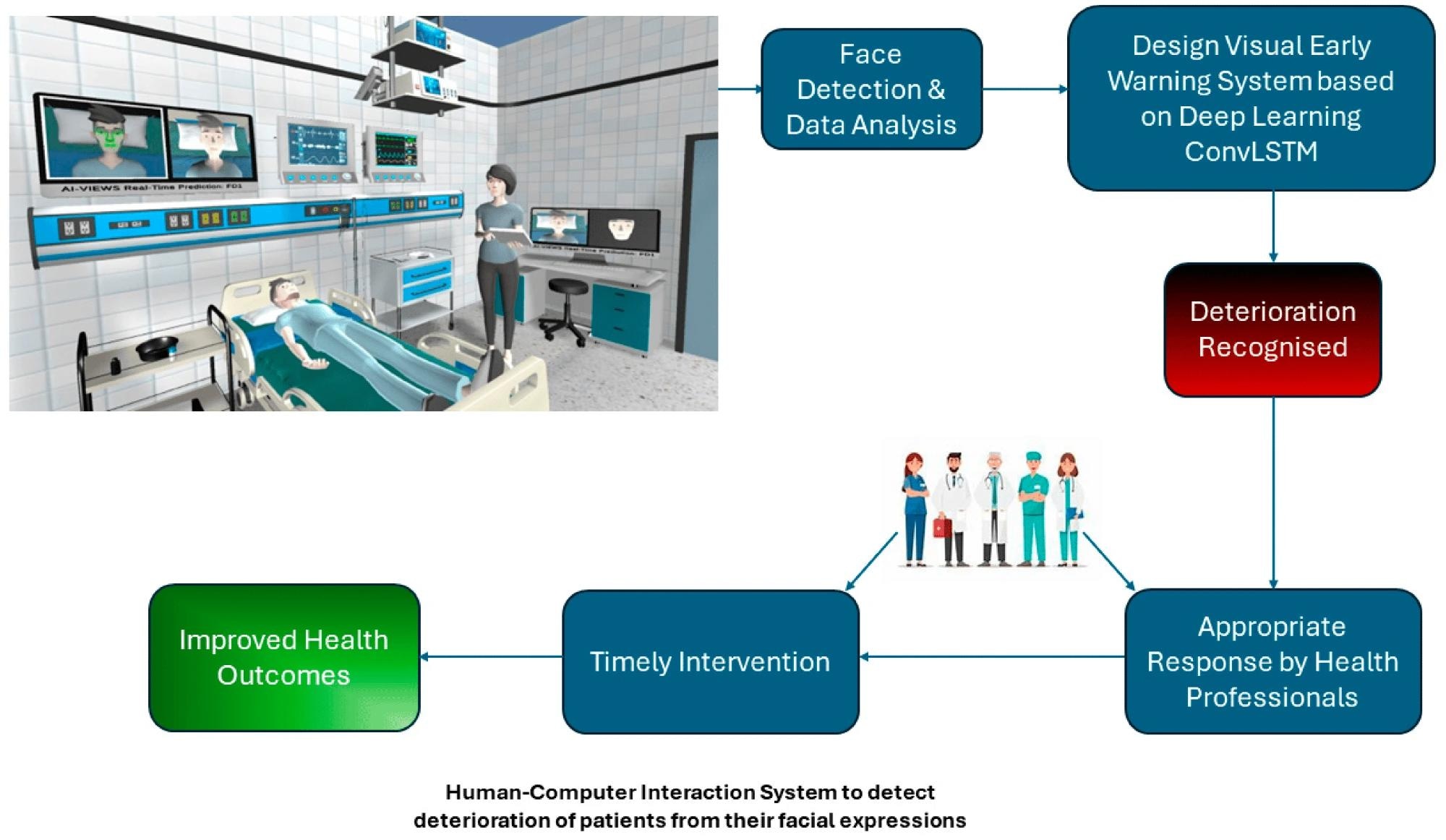

The study employed a systematic methodology to develop and evaluate a Convolutional Long Short-Term Memory (ConvLSTM) model for recognizing facial expressions, particularly those indicating patient deterioration. The process involved three key phases: generating the dataset, pre-processing the data, and implementing the ConvLSTM model.

First, a dataset of three-dimensional animated avatars displaying various facial expressions was generated using advanced tools. These avatars were designed to mimic the faces of real humans with diverse characteristics such as age, ethnicity, and facial features.

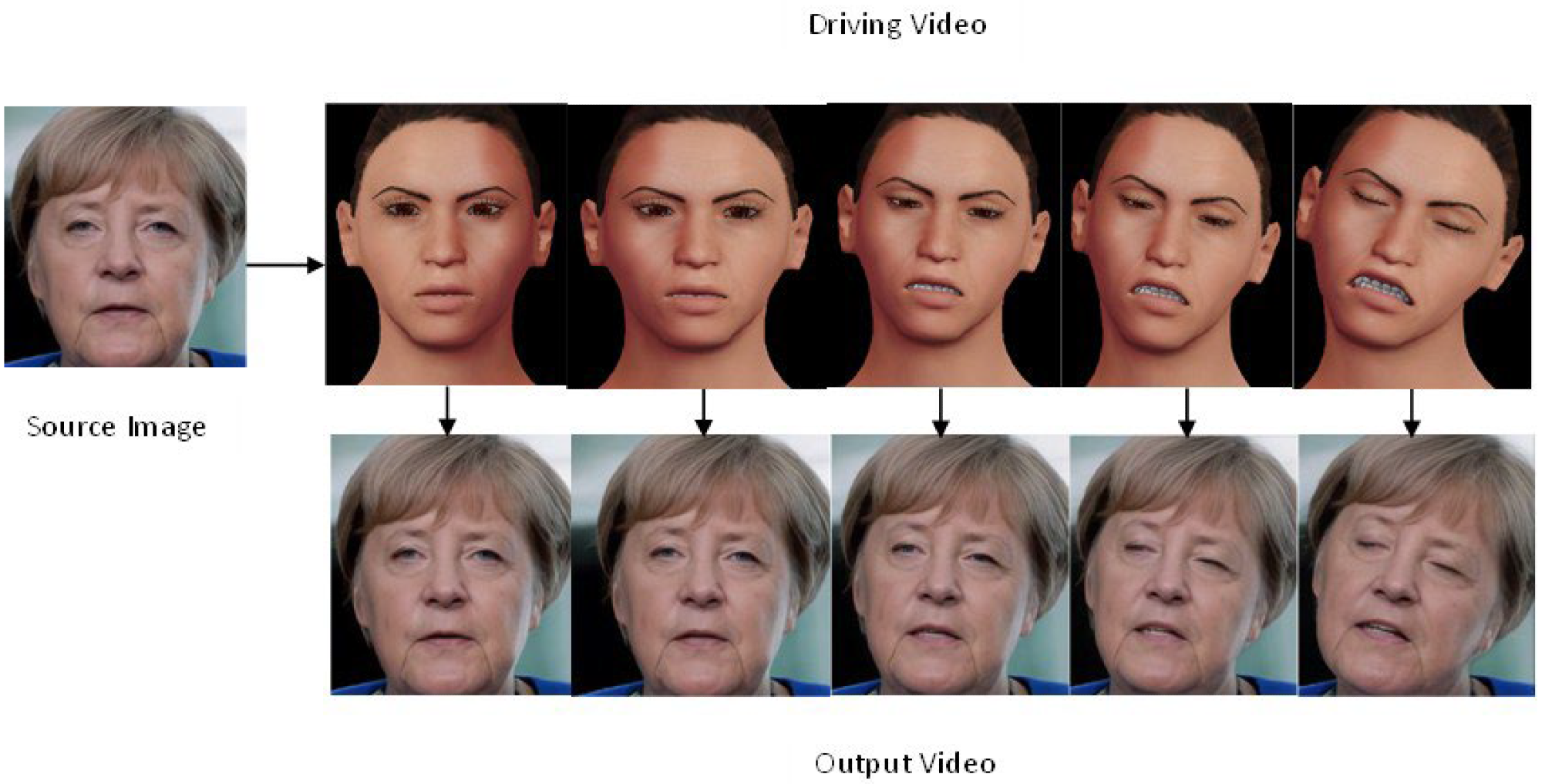

Each avatar performed specific expressions related to health deterioration, resulting in 125 video clips. The First-Order Motion Model (FOMM) was then used to transfer these expressions to static images from an open-source database, expanding the dataset to 176 video clips.

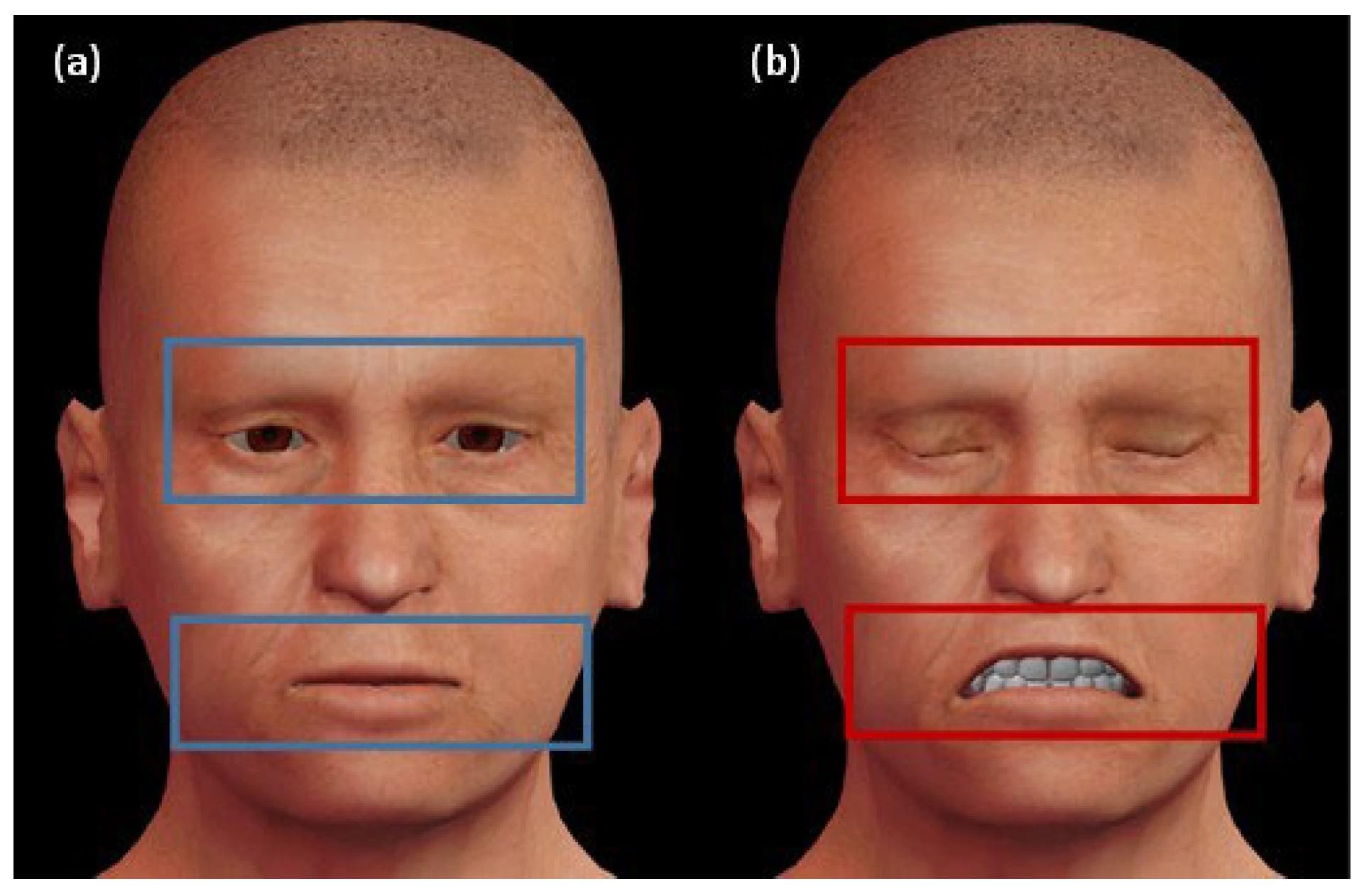

Facial regions that indicate whether a patient is deteriorating. (a) The left avatar shows a neutral expression, with the relevant regions bounded by blue rectangles. (b) The right avatar shows the final stage of deterioration, with the regions bounded by red rectangles.

Next, the dataset underwent pre-processing, which included face detection to isolate the facial regions. The dataset was split into training (85%) and test (15%) sets, and oversampling was applied to balance the training data.
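The split-then-balance step can be illustrated with a minimal sketch. It assumes a stratified 85/15 split and simple random oversampling (duplicating minority-class clips); the article does not say which splitting or oversampling technique the authors used, and the function names and the per-class clip counts below are invented for illustration only.

```python
import random
from collections import Counter, defaultdict

def stratified_split(labels, test_fraction=0.15, seed=0):
    """Return (train_idx, test_idx), holding out ~test_fraction of each class."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    train_idx, test_idx = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_test = max(1, round(len(indices) * test_fraction))
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])
    return sorted(train_idx), sorted(test_idx)

def random_oversample(train_idx, labels, seed=0):
    """Duplicate minority-class training indices until all classes match the largest."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx in train_idx:
        by_class[labels[idx]].append(idx)
    target = max(len(indices) for indices in by_class.values())
    balanced = []
    for indices in by_class.values():
        balanced.extend(indices)
        balanced.extend(rng.choices(indices, k=target - len(indices)))
    return balanced

# Hypothetical class sizes for 176 clips in five classes (not from the study).
labels = [0] * 60 + [1] * 40 + [2] * 30 + [3] * 26 + [4] * 20
train_idx, test_idx = stratified_split(labels)
balanced_train = random_oversample(train_idx, labels)
class_counts = Counter(labels[i] for i in balanced_train)
```

Oversampling is applied only after the split and only to the training indices, so no duplicated clip can leak into the test set.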

Finally, the ConvLSTM model, which integrates convolutional layers with LSTM cells, was proposed and implemented to capture both spatial and temporal dependencies in the video sequences, enabling accurate prediction of facial expressions over time.
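For readers unfamiliar with the architecture, a ConvLSTM cell is commonly written as below. This is a general sketch of a widely used simplified form that omits the peephole terms of the original formulation; the article does not describe the exact gate layout of the study's implementation. Here $*$ denotes convolution, $\circ$ the element-wise product, $\sigma$ the logistic sigmoid, $X_t$ the input frame at time $t$, $H_t$ the hidden state, $C_t$ the cell state, and $W$ and $b$ the learned kernels and biases.

$$
\begin{aligned}
i_t &= \sigma\left(W_{xi} * X_t + W_{hi} * H_{t-1} + b_i\right) \\
f_t &= \sigma\left(W_{xf} * X_t + W_{hf} * H_{t-1} + b_f\right) \\
o_t &= \sigma\left(W_{xo} * X_t + W_{ho} * H_{t-1} + b_o\right) \\
C_t &= f_t \circ C_{t-1} + i_t \circ \tanh\left(W_{xc} * X_t + W_{hc} * H_{t-1} + b_c\right) \\
H_t &= o_t \circ \tanh\left(C_t\right)
\end{aligned}
$$

Because the matrix multiplications of a standard LSTM are replaced by convolutions, the states $H_t$ and $C_t$ keep their spatial layout, which is what lets the cell track where on the face a change occurs as well as when.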

Frames from a sample video after using FOMM to transfer facial expressions from avatars to real facial images.

Findings

The study introduced a model designed to detect specific facial expressions associated with patient deterioration. This model was trained and tested on a dataset generated from avatars, which simulated five facial expression classes. These expressions were crafted to represent a diverse range of facial landmarks, skin tones, and ethnicities, mimicking patients at risk of clinical decline.

The model's performance was evaluated using several key metrics. It achieved high accuracy (99.8%), precision (99.8%), and recall (99.8%), demonstrating the model's effectiveness in correctly identifying the relevant facial expressions.

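Accuracy measures how often the model predicts the correct expression overall. Precision is the share of the model's predictions for a given expression that are correct (few false alarms), while recall is the share of actual instances of that expression that the model detects (few missed cases).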
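As a minimal sketch of how these three metrics relate, the snippet below derives them from a multi-class confusion matrix. The labels and predictions are toy values chosen for illustration, not the study's results, and the function names are hypothetical.

```python
def confusion_matrix(y_true, y_pred, n_classes):
    """matrix[t][p] counts samples whose true class is t and predicted class is p."""
    matrix = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix

def accuracy(matrix):
    """Fraction of all samples that lie on the diagonal (correct predictions)."""
    correct = sum(matrix[k][k] for k in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total

def per_class_precision_recall(matrix):
    """Return a (precision, recall) pair for each class."""
    n = len(matrix)
    results = []
    for k in range(n):
        tp = matrix[k][k]
        predicted_k = sum(matrix[r][k] for r in range(n))  # column sum
        actual_k = sum(matrix[k])                          # row sum
        precision = tp / predicted_k if predicted_k else 0.0
        recall = tp / actual_k if actual_k else 0.0
        results.append((precision, recall))
    return results

# Toy three-class example (invented values).
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
cm = confusion_matrix(y_true, y_pred, n_classes=3)
overall_accuracy = accuracy(cm)
scores = per_class_precision_recall(cm)
```

In this toy case, class 1 has perfect recall but imperfect precision, because one class 0 sample was wrongly predicted as class 1, which shows why the two metrics are reported separately.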

The ConvLSTM model outperformed the other methods the authors compared it against. It excelled at recognizing patterns in facial expressions over time, which is crucial for accurately assessing patient conditions.

Further analysis included the use of a confusion matrix and Receiver Operating Characteristic (ROC) curves to evaluate model performance, especially in handling imbalanced datasets. The model was also tested on unseen data, showing consistent accuracy across different classes of expressions.

These results suggest that the ConvLSTM model is highly effective in predicting the deterioration of patients based on their facial expressions, though the authors acknowledge the need for future studies involving real patient data to validate these findings in practical scenarios.

Conclusions

The study developed a deep learning-based model using the ConvLSTM architecture to detect facial expressions indicative of patient deterioration, achieving a high accuracy of 99.89%.

The model was trained on a synthetic dataset representing five specific facial expressions associated with deterioration risk. The system showed promising results, highlighting the potential of using advanced computer vision and machine learning techniques for early detection of patient decline.

However, a significant limitation of the study is the reliance on synthetic data instead of real-life patient data. Ethical concerns prevented the collection of actual patient data, particularly from critical care and intensive care units.

The researchers concluded by highlighting the need for future work that uses real-world data to validate the model further and that integrates it with other medical assessment systems to improve patient outcomes.

Journal reference:

- AI-Based Visual Early Warning System. Al-Tekreeti, Z., Moreno-Cuesta, J., Garcia, M.I.M., Rodrigues, M.A. Informatics (2024). DOI: 10.3390/informatics11030059, https://www.mdpi.com/2227-9709/11/3/59