Since SARS-CoV-2 infection could cause both symptomatic and asymptomatic manifestations, it is important to develop accurate tests to avoid general population quarantine. Previous studies have revealed that AI-based classifiers trained with respiratory audio data could identify SARS-CoV-2 status.

Although these studies indicated the effectiveness of AI-based classifiers, many challenges surfaced while applying them in real-world settings. Some factors that withheld AI-based classifier applications were sampling biases, unvalidated data on participants' COVID-19 status, and delay between infection and audio recording. It is imperative to determine whether the audio biomarkers of COVID-19 are unique to SARS-CoV-2 infection or are inappropriate confounding signals.

About the Study

The current study focussed on determining whether audio-based classifiers can be accurately used for COVID-19 screening. A large-scale polymerase chain reaction (PCR) dataset linked to audio-based COVID-19 screening (ABCS) was used. For this study, participants of the Real-time Assessment of Community Transmission (REACT) program and the National Health Service (NHS) Test-and-Trace (T+T) service were invited. All relevant demographic data was extracted from T+T/REACT records.

Participants were asked to complete survey questions and record four audio clips. For audio recordings, they were asked to read a specific sentence, followed by three successive exhalations, making a "ha" sound. Furthermore, the participants were asked to record forced coughs once and three times in succession. All recordings were documented in .wav format. The quality of the audio recordings was assessed, and 5,157 records were removed for quality-related issues.

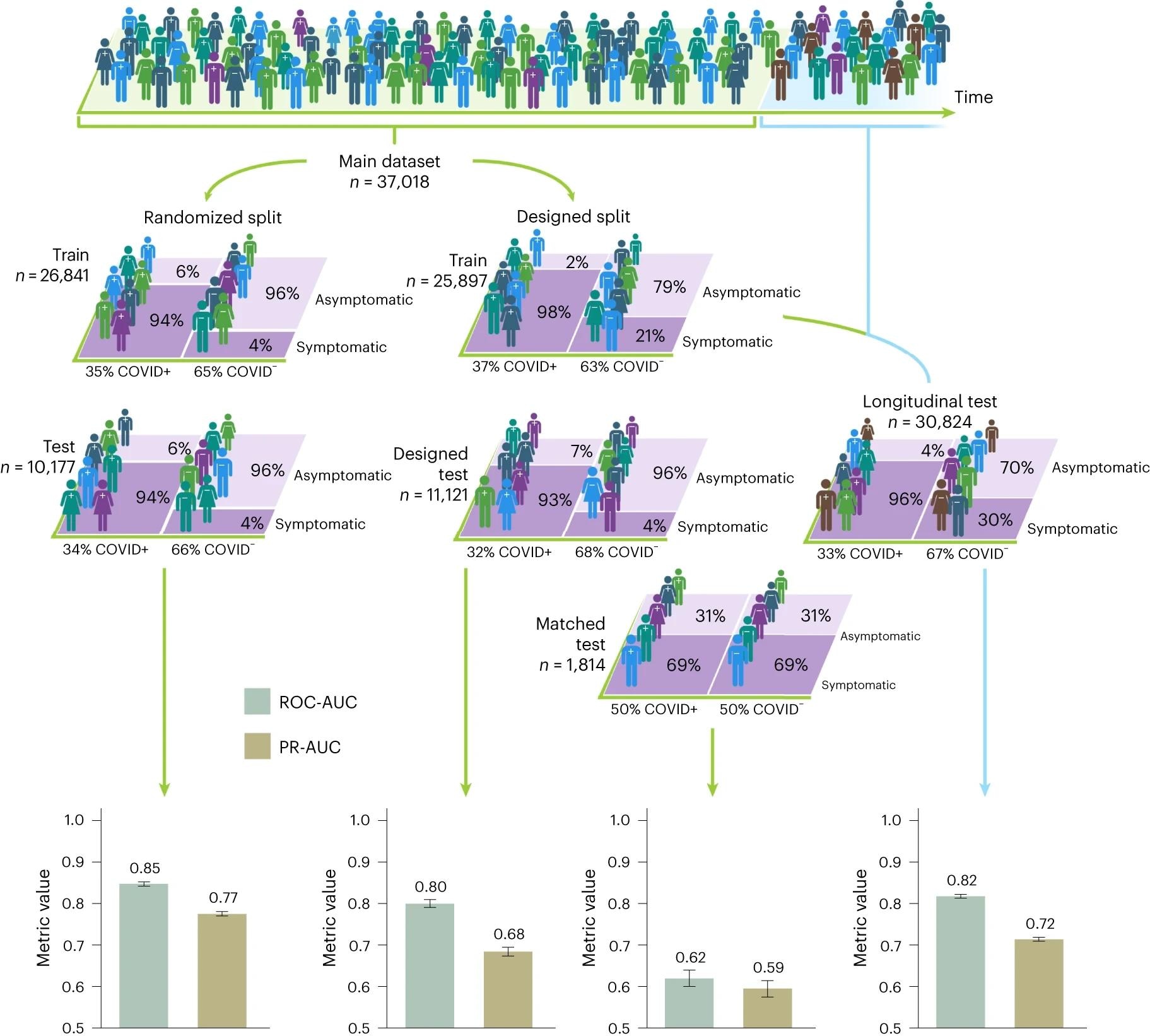

Human figures represent study participants and their corresponding COVID-19 infection status, with the different colours portraying different demographic or symptomatic features. When participants are randomly split into training and test sets, the randomized split models perform well at COVID-19 detection, achieving AUCs in excess of 0.8; however, matched test set performance is seen to drop to estimated AUC between 0.60 and 0.65, with an AUC of 0.5 representing random classification. Inflated classification performance is also seen in engineered out of distribution test sets such as: the designed test set, in which a select set of demographic groups appear solely in the testing set, and the longitudinal test set, in which there is no overlap in the time of submission between train and test instances. The 95% confidence intervals calculated via the normal approximation method are shown, along with the corresponding n numbers of the train and test sets.

Human figures represent study participants and their corresponding COVID-19 infection status, with the different colours portraying different demographic or symptomatic features. When participants are randomly split into training and test sets, the randomized split models perform well at COVID-19 detection, achieving AUCs in excess of 0.8; however, matched test set performance is seen to drop to estimated AUC between 0.60 and 0.65, with an AUC of 0.5 representing random classification. Inflated classification performance is also seen in engineered out of distribution test sets such as: the designed test set, in which a select set of demographic groups appear solely in the testing set, and the longitudinal test set, in which there is no overlap in the time of submission between train and test instances. The 95% confidence intervals calculated via the normal approximation method are shown, along with the corresponding n numbers of the train and test sets.

Study Findings

In this study, a respiratory acoustic dataset of 67,842 individuals was collected. Among them, 23,514 individuals tested positive for COVID-19. All data were linked with PCR test results. It must be noted that the most significant number of COVID-19-negative participants were recruited from six REACT rounds compared to the T+T channel.

The dataset considered in this study exhibited promising coverage across England. No significant association between geographical location and COVID-19 status was noted. The highest level of COVID-19 imbalance was found in Cornwall. A previous study indicated recruitment bias in ABCS, particularly linked with age, language, and gender, in both training data and test sets. Despite this bias, the training dataset was balanced in accordance with age and gender across COVID-positive and COVID-negative subgroups.

Consistent with previous studies, the unadjusted analysis conducted in this study exhibited that AI classifiers can predict COVID-19 status with high accuracy. However, when measured confounders were matched, a weak performance of AI classifiers in detecting SARS-CoV-2 status was observed.

Based on the findings, the current study proposed some guidelines to rectify recruitment bias's effect for future studies. Some of the recommendations are listed below:

- Audio samples stored in repositories must include details of the study recruitment criteria. In addition, relevant information about the individuals, including their gender, age, time of COVID-19 test, SARS-CoV-2 symptoms, and locations, must be documented along with the audio recording.

- All confounding factors must be identified and matched to help control recruitment bias.

- Experimental design must be developed, keeping the possible bias in mind. In most cases, data matching leads to a reduction in sample size. Observational studies recruit participants focusing on the maximized possibility of matching measured confounders.

- The predictive values of the classifiers must be compared with standard protocol findings.

- AI classifiers' predictive accuracy must be assessed. However, the predictive accuracy, sensitivity, and specificity vary depending on the targeted population.

- The classifiers' utility must be assessed for each testing outcome.

- The replication study must be conducted in randomized cohorts. Furthermore, pilot studies must be conducted in real-world settings based on domain-specific utility.

Conclusions

The current study has come with limitations that include the possibility of potential unmeasured confounders across REACT and T+T recruitment channels. For instance, PCR testing for COVID-19 was performed several days after self-screening of symptoms. In contrast, PCR tests in REACT were conducted on a pre-determined date, irrespective of the onset of symptoms. Although the majority of confounders were matched, there is a possibility of the presence of residual predictive variation.

Despite the limitations, this study highlighted the need to develop accurate machine-learning evaluation procedures to obtain unbiased outputs. Furthermore, it revealed that confounding factors are hard to detect and control across many AI applications.

Journal reference:

- Coppock, H. et al. (2024) Audio-based AI classifiers show no evidence of improved COVID-19 screening over simple symptoms checkers. Nature Machine Intelligence. 1-14. DOI: 10.1038/s42256-023-00773-8, https://www.nature.com/articles/s42256-023-00773-8