The listening tactic that we investigated is the idea that turning your head away from the person you're talking to, rather than directly facing them, helps you to understand what they're saying a little better. You keep looking at them at the same time, of course, because you need to be able to lip read.

Our research was inspired by a computer model of how well people understand speech in background noise. That model could produce predictions for any angle. It concluded that the best thing would be to angle your head 65 degrees away from the person that you're talking to. Of course, such a big head turn would remove the benefit of lip reading, because you would not be able to see the person’s lips with a head turn of 65 degrees.

So, we investigated the more modest head turn of about 30 degrees because we felt that this was the largest head turn people could make comfortably, whilst counter-rotating their eyes so that they could still look at the person they were talking to.

A lot of the people who will be interested in this are elderly people who struggle to talk in restaurants, for example. They are also likely to be wearing glasses, in which case you can't see others’ faces once you turn your head much more than 30 degrees.

What did the research involve and did you test the tactic in the laboratory and also in real-world settings?

Our research was all carried out in the laboratory, but we did it in two different ways. One was a very typical laboratory situation, with a simple listening task involving one source of speech sound and another source of noise, carried out in a sound-treated room in order to minimize the number of echoes. This task enabled us to investigate the basic effect, check that it worked, measure how big the effect was and confirm that it conformed to the model predictions.

It was also important at this stage to check that people were able to lip read because a lot of people often think that you can’t lip read once you’ve turned your head away. We thought that you should be able to because you can counter-rotate your eyes, but it's not entirely clear whether that might still affect your ability to pay attention in some way.

Although you're still looking at them, you might somehow not be so able to process the information, so this needed to be checked. Because of this common belief, it was important we provided the evidence that you can lip read just as well when turning your head away by 30 degrees.

That was the simple laboratory situation. We also wanted to know whether it would work in the real world, because in the real world you have a lot more complexity than a laboratory setting. There are sound sources coming from lots of different directions, the location has sound reflecting off the walls in very complex patterns etc.

It might be argued that because you've got sound coming at you from every direction it doesn't matter which way you point your head. We disagree with this because the important sound source that you’re trying to listen to is typically close to you and would still be affected by turning the head. Although the noise comes from everywhere, the target sound comes from somewhere quite close.

By using virtual acoustics to simulate a complex listening situation in a restaurant, we looked at the effect of turning the head in a realistic setting. We based our measurements on an existing restaurant. For these measurements, we had a mannequin and lots of loudspeakers. The mannequin was placed in a seat and had microphones in its ears. We played sounds from different places in the room and rotated its head in different directions.

The acoustic measurements were used in order to create a virtual simulation of the restaurant so that it was as realistic as possible. The recording had nine different people talking at once in the restaurant at different places, on top of the person you are trying to listen to. The head was in different positions and the speaker and listener were at six different tables to see whether it worked for every listening position.

It's played back to people wearing headphones, but they feel as though they're in the restaurant. They have all this noise around them and every now and again someone from across the table says a sentence to them that they have to transcribe.

We found that, although the effects were smaller than the previous experiment, there was still a substantial amount of improvement to people understanding speech in background noise. Therefore, consistently turning the head to one side was beneficial.

Did the listeners in your study all have cochlear implants?

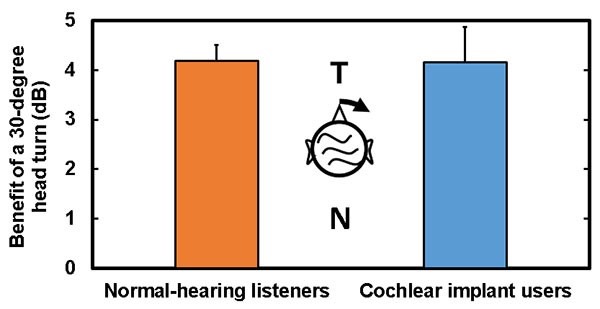

The study with the staged restaurant setting was only done in normally hearing listeners, but the laboratory-style study was done with people that had cochlear implants as well as people with normal hearing, so we've got data from both those groups.

What were your main findings?

The main findings were that head rotation does improve speech intelligibility when background noise is present. However, it depends on the exact situation. In an idealized situation, where you've got one speech sound source and one noise sound source and you can turn your head as much as you want, you can get as much as 10 dB benefit by turning your head around. But if you limit the head turn to 30 degrees so that you can still get the lip reading benefit, then you get a benefit of about 4 dB in signal-to-noise ratio.

If we look at the more complex listening situation where there are sounds all over the place and reflections are coming off walls and so on, similar to the one simulated in our virtual restaurant experiment, then it's about 1 dB, but that still makes a substantial difference to intelligibility, so it's a worthwhile tactic to adopt.

What does a 4-decibel improvement mean?

A 4 dB improvement may sound small because you may have heard of people having hearing losses of 50 dB or 60 dB, but they're quite different things. It's a very different scale when you're talking about signal-to-noise ratios compared to talking about absolute thresholds. A 4 dB improvement in signal-to-noise ratio can be the difference between understanding absolutely everything in a sentence and understanding nothing at all.

At the cusp of understanding, 1 dB is about a 15% improvement in intelligibility, so in this context it's quite large. Enough to help you follow a conversation where before you weren't able to follow it.

When people have a hearing loss of 50 or 60 dB, that's not referring to their ability to deal with background noise, it's their ability to hear extremely quiet sounds. Their threshold for detecting very quiet sounds is 50 or 60 dB higher than it is for a normally hearing listener. That doesn't necessarily mean that there's so much of a difference once noise is added.

4 dB is also, coincidentally, about the size of deficit that you normally have in hearing impaired listeners in their ability to deal with speech and background noise. If you measure the lowest level at which they can understand speech in background noise, they need speech to be 4 dB higher compared to the noise than a normally hearing listener for them to understand it.
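To make the arithmetic in this answer concrete, here is a minimal Python sketch. It assumes a logistic curve relating signal-to-noise ratio to the proportion of speech understood, with its steepest slope set to the figure of about 15% per dB quoted above. The logistic shape, the function name `intelligibility` and the midpoint of 0 dB are illustrative assumptions, not something taken from the study.

```python
import math

# About 15% of speech gained per dB at the cusp of understanding (figure from the interview).
SLOPE_AT_MIDPOINT = 0.15


def intelligibility(snr_db, midpoint_db=0.0, slope=SLOPE_AT_MIDPOINT):
    """Proportion of speech understood (0..1) under an ASSUMED logistic curve.

    midpoint_db is a hypothetical signal-to-noise ratio at which half of the
    speech is understood; the real value depends on the listener and the room.
    """
    k = 4.0 * slope  # the steepest slope of a logistic curve is k / 4
    return 1.0 / (1.0 + math.exp(-k * (snr_db - midpoint_db)))


# Compare intelligibility before and after a gain centred on the midpoint.
for gain_db in (1, 4, 10):
    before = intelligibility(-gain_db / 2)
    after = intelligibility(gain_db / 2)
    print(f"{gain_db:>2} dB gain: {before:.0%} -> {after:.0%}")
```

Under these assumptions, a 4 dB gain centred on the midpoint moves a listener from understanding roughly a quarter of the speech to roughly three quarters, which is why a number that sounds small can decide whether a conversation can be followed.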

How much is known about the underlying mechanism behind why it is better to have a clear signal in one ear rather than a mediocre signal in both?

It is not completely understood why this is, but it's been known for some time and this theory is consistent with a lot of experimental evidence. Most people who theorize about how people understand speech in background noise take account of the better ear signal-to-noise ratio.

Apparently the other ear doesn't matter too much. You do need the other ear because it's used for a secondary process called binaural masking, which helps you a little bit extra, but the signal-to-noise ratio at that ear is not so important.

How was the study funded?

It was funded by Action on Hearing Loss, who paid for a PhD studentship for Jacques Grange. He conducted most of the research.

What further research is in the pipeline?

We're interested to know about people with hearing impairments. Usually hearing impairments are associated with old age, so we want to confirm that turning the head improves speech intelligibility for them as well.

You might doubt that it would, because these effects on signal-to-noise ratio mainly occur at high frequencies, and high frequencies are the frequencies that hearing impaired people typically lose. So it might be presumed that it won't work as well for them. However, we believe that it still should in most cases and that even where it doesn't, using a hearing aid should restore the effect.

Our reasoning is that, since we're concerned with signal-to-noise ratio and not with the absolute level of the sounds, the fact that you're hearing impaired and you hear high frequency sounds as being quieter doesn't change their signal-to-noise ratio. You still hear the signal above the noise if it's intense enough. It isn't until the sounds are so attenuated by your hearing impairment that you can't hear them at all, that you lose benefits of improved signal-to-noise ratio.

It's been suggested, for instance, that hearing impairments have to exceed 50 or 60 dB before you lose these sorts of benefits. That's one reason why we think that most hearing impaired people should still be able to benefit from this tactic.

If the high-frequency hearing impairment is more than 50-60 dB, a hearing aid will amplify those high frequencies and make them audible again, in which case it should restore the effect. Once again, these things need to be demonstrated formally, so we are moving on to that now.
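The reasoning in this answer can be sketched in a few lines of Python. The sketch treats a hearing loss as equal attenuation of speech and noise, and a hearing aid as gain that offsets part of that attenuation. This is a deliberately simplified model, and the sound levels, the 0 dB audibility threshold and the function names are all illustrative assumptions rather than values from the research.

```python
def apply_hearing_loss(signal_db, noise_db, loss_db, aid_gain_db=0.0):
    """Attenuate signal and noise equally (an ASSUMED model of hearing loss).

    aid_gain_db is a hypothetical hearing-aid gain that offsets part of the loss.
    """
    net_loss_db = loss_db - aid_gain_db
    return signal_db - net_loss_db, noise_db - net_loss_db


def snr_db(signal_db, noise_db):
    """Signal-to-noise ratio in dB is the difference between the two levels."""
    return signal_db - noise_db


def is_audible(level_db, threshold_db=0.0):
    """A sound counts as audible if it stays above an assumed 0 dB threshold."""
    return level_db > threshold_db


SPEECH_DB, NOISE_DB = 65.0, 61.0  # illustrative levels, not from the study

# (loss, hearing-aid gain) pairs: normal hearing, moderate loss, severe loss, severe loss with aid.
for loss_db, gain_db in ((0.0, 0.0), (40.0, 0.0), (70.0, 0.0), (70.0, 30.0)):
    speech, noise = apply_hearing_loss(SPEECH_DB, NOISE_DB, loss_db, gain_db)
    print(
        f"loss {loss_db:.0f} dB, aid gain {gain_db:.0f} dB: "
        f"SNR {snr_db(speech, noise):+.0f} dB, speech audible: {is_audible(speech)}"
    )
```

In this model the signal-to-noise ratio stays the same at every level of loss. What changes is whether the speech remains audible at all, and that is the point at which amplification would matter.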

Where can readers find more information?

About Prof. John Culling

John Culling was trained at Sussex University and after post-doctoral spells at the MRC Institute of Hearing Research, at Oxford and at Boston Universities, he moved to Cardiff, where he has taught Psychology for nearly 20 years.

His research on hearing has always had the “cocktail party problem” as its core focus. This problem concerns understanding how listeners are able to identify sounds, especially speech sounds, in background noise. This ability is remarkable, because the sound of the attended voice at the listener’s two ears may be well below the level of interfering noise.

The answers to this question have important practical ramifications, since hearing impaired listeners often find a single voice intelligible when amplified, but find any interfering sound intolerable.

In very noisy environments, normally-hearing listeners will also struggle, especially in reverberant rooms. By investigating the perceptual mechanisms which underlie these effects, he aims to uncover principles which could guide the design of hearing-aids, cochlear implants and, indeed, rooms so that they facilitate rather than impede communication in noise.