Their findings indicate that integrating emotional features, particularly negative emotions, into machine learning models significantly enhances the accuracy and reliability of fake news detection on social media platforms.

Study: Emotions unveiled: detecting COVID-19 fake news on social media. Image Credit: voyata / Shutterstock

Background

It is well established that social media significantly affects many aspects of human life: it offers benefits such as connectivity and information sharing, but it also presents dangers such as the spread of fake news.

The research highlighted that fake news undermines public trust, democracy, and economic stability. Notable incidents during the 2016 United States presidential election and the coronavirus disease 2019 (COVID-19) pandemic illustrate its serious consequences.

Although previous studies examined cognitive biases and the structural aspects of social media that facilitate the spread of fake news, as well as various machine learning techniques for detecting it, there was a notable gap in understanding the role of emotions in fake news detection.

About the study

This study aimed to explore how emotional features, particularly negative emotions, could enhance the accuracy of machine learning models in detecting fake news. Researchers focused on detecting fake news by analyzing the sentiments and emotions expressed in tweets during the COVID-19 pandemic.

They used a dataset of 10,700 English tweets, categorized as real or fake, compiled in a previous study. The tweets were sourced from public fact-checking websites and social media, ensuring a balanced dataset with 5,600 real news stories and 5,100 fake news stories.

The preprocessing steps involved cleaning the text by removing non-alphabetic characters, converting to lowercase, removing stop words, and lemmatizing. The sentiment analysis employed three lexicons that identified sentiments (positive, negative, neutral) within the tweets.
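The cleaning steps described above can be sketched roughly as follows. This is a minimal illustration, not the study's code: the stop-word set and the lemma table are tiny hypothetical stand-ins for the full stop-word list and lemmatizer that a real pipeline would use, and the function name `preprocess` is an assumption.

```python
import re

# Hypothetical, deliberately tiny stop-word list (a real pipeline would use a fuller one).
STOP_WORDS = {"a", "an", "the", "is", "are", "was", "were", "of", "to", "in", "and", "for", "on"}

# Toy lemma table standing in for a real lemmatizer (an assumption, not the study's tool).
LEMMAS = {"vaccines": "vaccine", "cases": "case", "cures": "cure", "days": "day"}


def preprocess(tweet: str) -> list[str]:
    """Clean one tweet: drop non-alphabetic characters, lowercase, remove stop words, lemmatize."""
    # Replace anything that is not a letter or whitespace with a space.
    letters_only = re.sub(r"[^A-Za-z\s]", " ", tweet)
    # Lowercase and split on whitespace.
    tokens = letters_only.lower().split()
    # Remove stop words.
    kept = [t for t in tokens if t not in STOP_WORDS]
    # Map each token to its lemma when the toy table knows it.
    return [LEMMAS.get(t, t) for t in kept]


print(preprocess("BREAKING: Garlic cures COVID-19 in 3 days!!!"))
```

Note that stripping non-alphabetic characters turns "COVID-19" into "covid", which is one reason the order of the cleaning steps matters.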

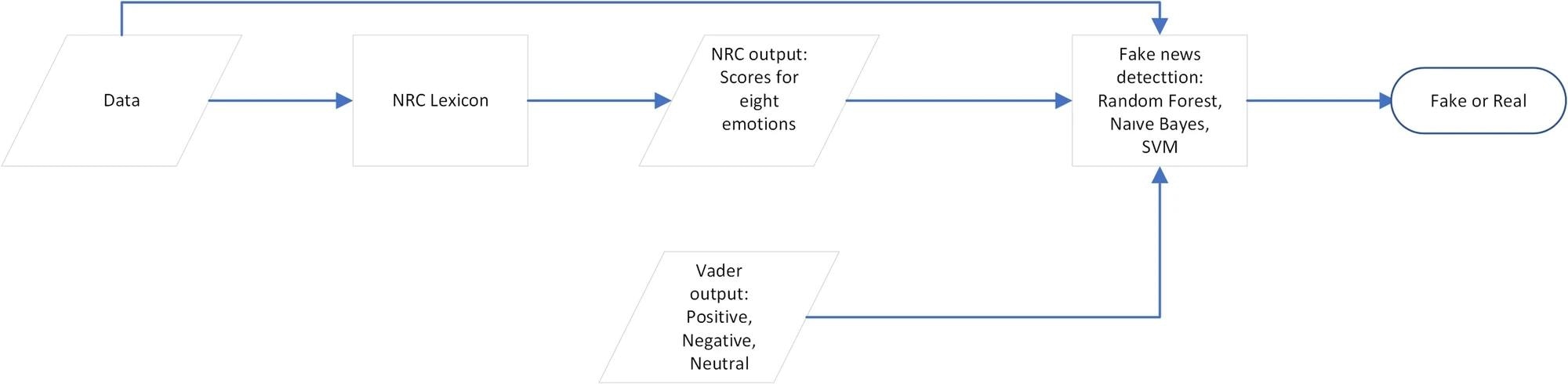

The emotion lexicon was used to extract eight basic emotions (anticipation, anger, joy, sadness, surprise, fear, trust, and disgust) from the tweets. These emotional features were then incorporated into various machine learning models—random forest, support vector machine (SVM), and naïve Bayes—as well as a deep learning model called bidirectional encoder representations from transformers (BERT) to classify the tweets as real or fake.
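As a rough sketch of how a lexicon can turn a tweet into emotion features, the example below counts lexicon hits for each of the eight emotions and normalizes by tweet length. The word list in `TOY_LEXICON` is invented purely for illustration, and the normalization is an assumption; the article does not specify how the study scored emotional intensity.

```python
from collections import Counter

# The eight basic emotions named in the article, in a fixed feature order.
EMOTIONS = ["anticipation", "anger", "joy", "sadness", "surprise", "fear", "trust", "disgust"]

# Toy word-to-emotions lexicon, invented for illustration only.
TOY_LEXICON = {
    "panic": ["fear"],
    "hoax": ["anger", "disgust"],
    "recover": ["joy", "anticipation"],
    "doctor": ["trust"],
}


def emotion_features(tokens):
    """Return one value per emotion: lexicon hits divided by the number of tokens."""
    counts = Counter(e for t in tokens for e in TOY_LEXICON.get(t, []))
    total = len(tokens) or 1  # avoid division by zero for empty tweets
    return [counts[e] / total for e in EMOTIONS]


vector = emotion_features(["hoax", "panic", "doctor", "today"])
print(dict(zip(EMOTIONS, vector)))
```

A vector like this can be appended to a tweet's text features before the classifier is trained, which is the general idea behind comparing models with and without emotion features.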

The dataset was split into training (80%) and testing (20%) sets. The models' performance was evaluated with and without including emotional features.
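For a sense of scale, an 80/20 split of 10,700 tweets gives 8,560 for training and 2,140 for testing. A minimal sketch of such a split is shown below; the shuffling, the fixed seed, and the function name are assumptions, since the article does not say how the split was drawn.

```python
import random


def split_80_20(rows, seed=0):
    """Shuffle a copy of the rows and split it into 80% training and 20% testing."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # fixed seed so the split is reproducible
    cut = len(shuffled) * 8 // 10  # integer arithmetic avoids float rounding
    return shuffled[:cut], shuffled[cut:]


# Stand-in for the 10,700 labeled tweets: here just their row indices.
train, test = split_80_20(range(10_700))
print(len(train), len(test))
```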

The figure above shows a schematic overview of the model.

Findings

The study revealed critical insights into the role of sentiment and emotion in fake news detection during the COVID-19 pandemic.

Sentiment analysis showed that fake news tweets had more negative sentiments (39.31%) compared to positive sentiments (31.15%), while real news tweets exhibited the opposite trend, with more positive sentiments (46.45%) than negative sentiments (35.20%).
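Percentages like these come from counting the sentiment label assigned to each tweet within a class. The sketch below shows that arithmetic on made-up labels; the labels and the function name are hypothetical and do not reproduce the study's data.

```python
from collections import Counter


def sentiment_shares(labels):
    """Return the percentage of tweets carrying each sentiment label."""
    sentiments = ("positive", "negative", "neutral")
    if not labels:
        return {s: 0.0 for s in sentiments}
    counts = Counter(labels)
    return {s: round(100 * counts[s] / len(labels), 2) for s in sentiments}


# Made-up labels for four tweets in one class, for illustration only.
toy_labels = ["negative", "negative", "positive", "neutral"]
print(sentiment_shares(toy_labels))
```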

Emotion analysis using the emotion lexicon indicated that fake news predominantly elicited negative emotions such as anger, disgust, and fear. In contrast, real news was associated with more positive emotions like surprise, joy, and anticipation.

Significant differences were observed for emotions like trust, fear, and anticipation, with trust being more prevalent in real news and fear being more intense in fake news.

Incorporating emotion features improved the fake news detection performance of the models, including random forest, SVM, and BERT.

The random forest model, for instance, highlighted fear, anticipation, and trust as the most significant features for distinguishing between real and fake news.

The BERT model, tuned using five-fold cross-validation and trained for three epochs, also showed improved accuracy when emotion features were added.
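In five-fold cross-validation, the data is divided into five parts, and each part serves once as the validation set while the other four are used for training. The sketch below generates such index splits in plain Python. It is illustrative only: the folds here are contiguous and unshuffled, and the article does not describe how the study formed its folds.

```python
def k_fold_indices(n_samples, k=5):
    """Yield (train_indices, validation_indices) pairs for k contiguous folds."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, validation
        start += size


for fold, (train, validation) in enumerate(k_fold_indices(10), start=1):
    print(fold, validation)
```

Each sample appears in exactly one validation fold, so averaging the five validation scores uses every sample once for evaluation.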

Overall, the study confirmed that emotions, particularly negative ones, play a crucial role in the spread of fake news, and incorporating these emotional features into detection models significantly boosts their effectiveness.

Conclusions

This study highlights the crucial role of emotions in detecting fake news, particularly during the COVID-19 pandemic.

The results showed that incorporating emotional data significantly improved the accuracy and reliability of fake news detection.

The lexicon that identified negative sentiments was particularly effective, indicating that fake news tweets generally contained more negative sentiments and emotions compared to real news tweets.

Another notable finding was that fake news predominantly elicits negative emotions like fear, anger, and disgust, while real news evokes positive emotions such as anticipation, joy, and trust.

These findings align with previous studies that have also noted the prevalence of negative emotions in fake news.

The study's strength lies in its detailed analysis of emotional intensities and its incorporation of these features into machine learning models, which enhanced their accuracy.

However, the dataset covers a restricted timeframe, which limits the findings; more extensive data could allow broader generalization.

Future research should explore emotional dynamics in different crises and enhance detection models using pre-trained emotion features. This interdisciplinary approach can significantly contribute to understanding and mitigating fake news across various domains.