Innovative Approach to Aging and Disease Prediction

In a recent study published in the journal Proceedings of the National Academy of Sciences, a team of scientists from China developed a multimodal image Transformer system that estimates biological age from tongue, retinal, and facial images. The resulting estimate can be used to predict the risk of age-related chronic diseases.

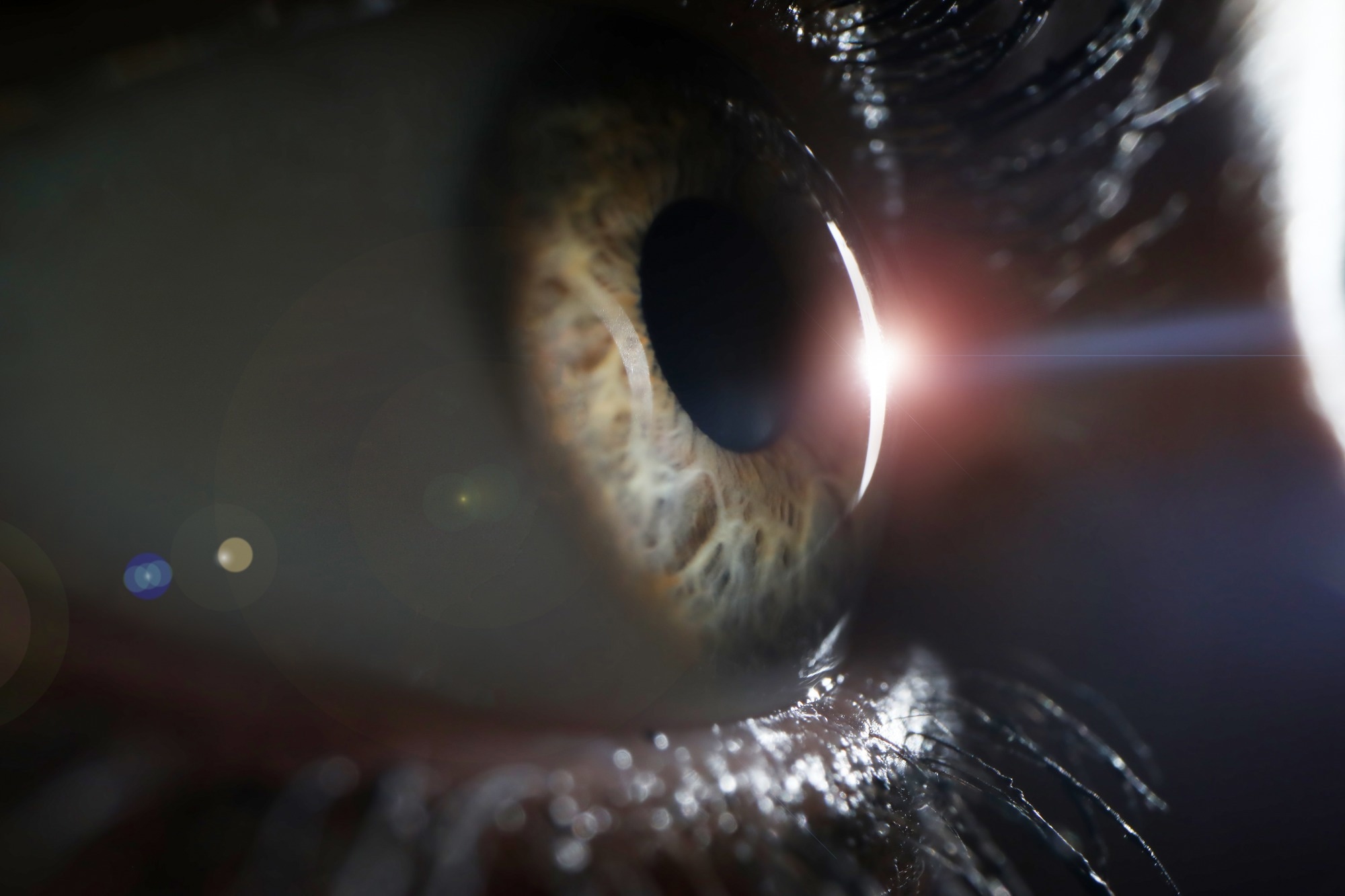

Study: Accurate estimation of biological age and its application in disease prediction using a multimodal image Transformer system. Image Credit: H_Ko / Shutterstock

Background on Biological Age and Chronic Diseases

Identifying and standardizing markers that predict the risk of age-related chronic diseases, and using them clinically to manage the health of the aging population, has so far proven difficult because aging varies across tissues and organs. Chronological time is not the only factor that determines the onset of many chronic diseases affecting different organ systems. Biological age, in contrast to chronological age, is determined by the functional and structural changes that accumulate during aging.

Environmental and genetic factors can drive these changes, and a reliable method for determining biological age is clinically important for predicting the risk of numerous age-related diseases and enabling early intervention. Artificial intelligence (AI) has recently been used to derive biomarkers such as retinal age, brain age from brain imaging, facial age, and epigenetic clocks based on deoxyribonucleic acid (DNA) methylation patterns.

Study Methodology and AI Model Development

In the present study, the researchers developed an AI-based modeling system that uses tongue, retinal fundus, and facial images to estimate biological age and to predict the risk of organ-specific chronic diseases. The optic nerve contains axons derived from the central nervous system, which makes retinal images a potential indicator of brain health. Furthermore, tongue images can reflect microbiome exposure and may therefore indicate the health of the oral cavity and the gastrointestinal tract.

The AI tool uses a Transformer-based architecture. Facial, tongue, and retinal images from a group of healthy participants were used to train and validate the model, and images from participants who had various chronic diseases or known risk factors for chronic diseases were then used to test it. These test images helped the researchers understand how various lifestyle factors and chronic diseases affect biological age.

The Transformer-based model uses a cross-attention module to estimate biological age from the combined information in retinal fundus, facial, and tongue images. Linear projection modules first process each image and construct the corresponding classification and image tokens. These tokens serve as input to the multimodal Transformer, which is optimized by backpropagation using a loss function computed between chronological age and predicted biological age.
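The token pipeline described above can be sketched in NumPy. This is a minimal, forward-pass-only sketch under stated assumptions: the patch size, embedding width, single attention head, the choice of letting each modality's classification token query the tokens of all three modalities, and the absolute-error (L1) loss are illustrative choices, not details confirmed by the study. Random arrays stand in for images, and the backpropagation step is omitted; in practice an autodiff framework would compute the gradients of the loss.

```python
import numpy as np

D = 32                       # hypothetical token embedding width
P = 4                        # hypothetical patch side length in pixels
PATCH_DIM = P * P * 3        # flattened RGB patch size
MODALITIES = ("face", "tongue", "retina")


def patchify(image, p=P):
    """Split an (H, W, 3) image into flattened, non-overlapping p x p patches."""
    h, w, c = image.shape
    image = image[: h - h % p, : w - w % p]          # drop ragged borders
    patches = image.reshape(h // p, p, w // p, p, c).transpose(0, 2, 1, 3, 4)
    return patches.reshape(-1, p * p * c)            # (num_patches, PATCH_DIM)


def embed(image, W, cls):
    """Linearly project patches to image tokens and prepend a classification token."""
    tokens = patchify(image) @ W                     # (num_patches, D)
    return np.vstack([cls[None, :], tokens])         # (num_patches + 1, D)


def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)            # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)


def cross_attention(queries, context, Wq, Wk, Wv):
    """Single-head scaled dot-product attention of one token set over another."""
    q, k, v = queries @ Wq, context @ Wk, context @ Wv
    return softmax(q @ k.T / np.sqrt(q.shape[-1])) @ v


def init_params(seed=0):
    """Random (untrained) weights; all shapes and scales are hypothetical."""
    rng = np.random.default_rng(seed)
    return {
        "W": {m: rng.normal(0, 0.02, (PATCH_DIM, D)) for m in MODALITIES},
        "cls": {m: rng.normal(0, 0.02, D) for m in MODALITIES},
        "Wq": rng.normal(0, 0.1, (D, D)),
        "Wk": rng.normal(0, 0.1, (D, D)),
        "Wv": rng.normal(0, 0.1, (D, D)),
        "w_out": rng.normal(0, 0.1, D),
        "b_out": 50.0,                               # hypothetical bias near a mid-life age
    }


def predict_age(images, params):
    """Fuse the three modalities with cross-attention and regress a scalar age."""
    seqs = [embed(images[m], params["W"][m], params["cls"][m]) for m in MODALITIES]
    cls_tokens = np.vstack([s[:1] for s in seqs])    # (3, D): one classification token per modality
    all_tokens = np.vstack(seqs)                     # tokens from every modality
    fused = cross_attention(cls_tokens, all_tokens, params["Wq"], params["Wk"], params["Wv"])
    return float(fused.mean(axis=0) @ params["w_out"] + params["b_out"])


def l1_loss(predicted_age, chronological_age):
    """Absolute error between predicted biological age and chronological age (assumed loss)."""
    return abs(predicted_age - chronological_age)


rng = np.random.default_rng(1)
images = {m: rng.random((16, 16, 3)) for m in MODALITIES}   # stand-in images
params = init_params()
pred = predict_age(images, params)
print(pred, l1_loss(pred, 52.0))
```

Because the weights are untrained, the printed age is meaningless; the point is the data flow from patches to tokens to a fused prediction that the loss compares with chronological age.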

The training dataset was obtained from participants who were followed longitudinally through health checks. Three-dimensional (3D) scans of the face, retina, and tongue were collected, and relevant medical information was obtained from participants' medical records and from blood tests on fasting blood samples. Other metadata included lifestyle factors such as alcohol use and smoking, demographic information, and results of clinical laboratory tests and physical examinations. Images from a second cohort of participants served as the independent validation cohort.

Results from the AI-Based Biological Age Prediction

The study reported that tongue, retinal, and facial images from over 11,000 healthy participants were used to train the AI-based model to predict biological age, while images from close to 3,000 participants with six major chronic diseases served as the test dataset. The researchers calculated AgeDiff, defined as the difference between chronological age and predicted biological age, and found that it differed significantly between the healthy and diseased cohorts.
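AgeDiff itself is simple arithmetic, and the cohort comparison can be sketched in a few lines. This minimal sketch assumes the sign convention AgeDiff = predicted biological age minus chronological age, so a positive value means a person appears older than their years; the article does not pin down the sign. Welch's t statistic is an assumed choice of test, since the article does not name one, and the ages below are invented toy values, not study data.

```python
from math import sqrt
from statistics import mean, variance


def age_diff(predicted, chronological):
    """AgeDiff per person, assuming predicted biological age minus chronological age."""
    return [p - c for p, c in zip(predicted, chronological)]


def welch_t(a, b):
    """Welch's t statistic for two samples that may have unequal variances."""
    return (mean(a) - mean(b)) / sqrt(variance(a) / len(a) + variance(b) / len(b))


# Toy values for illustration only (not data from the study).
healthy = age_diff(predicted=[50, 61, 45, 70], chronological=[51, 60, 46, 69])
diseased = age_diff(predicted=[58, 66, 52, 75], chronological=[54, 62, 49, 70])

print(mean(healthy), mean(diseased), welch_t(healthy, diseased))
```

A negative t here means the toy diseased group has the higher mean AgeDiff. Turning t into a p-value would also need the Welch-Satterthwaite degrees of freedom, which this sketch leaves out.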

AgeDiff proved to be a reliable marker that can be used on its own or together with other known risk factors to stratify the population and predict the progression of age-related chronic diseases. The researchers suggest that their multimodal biological age prediction tool was more accurate than other biological age predictors based on phenotypic data, DNA methylation epigenetic clocks, blood profiles, or transcriptome aging clocks.
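Stratification by AgeDiff, alone or alongside another risk factor, could look like the following minimal sketch. Splitting people into tertiles with Python's `statistics.quantiles` and combining AgeDiff with smoking status in a logistic score are assumptions made for illustration; the article does not describe the study's stratification scheme or risk model, and `combined_risk` and its coefficients are hypothetical.

```python
from bisect import bisect_right
from math import exp
from statistics import quantiles


def stratify(age_diffs, n_groups=3):
    """Assign each person to an AgeDiff quantile group (0 = lowest AgeDiff)."""
    cuts = quantiles(age_diffs, n=n_groups)          # n_groups - 1 cut points
    return [bisect_right(cuts, d) for d in age_diffs], cuts


def combined_risk(age_diff, smoker, b0, b_age, b_smoke):
    """Hypothetical logistic score combining AgeDiff with one conventional risk factor."""
    z = b0 + b_age * age_diff + b_smoke * (1 if smoker else 0)
    return 1 / (1 + exp(-z))


# Toy AgeDiff values for illustration only (not data from the study).
groups, cuts = stratify([-3, -2, -1, 0, 1, 2, 3, 4, 5])
print(groups, cuts)

# Hypothetical coefficients; a real model would fit them to outcome data.
print(combined_risk(4.0, smoker=True, b0=-3.0, b_age=0.2, b_smoke=0.7))
```

Quantile cut points keep the groups roughly equal in size regardless of the AgeDiff scale, which makes them a common default for exploratory stratification; fixed clinical thresholds would be an equally valid design choice.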

The validated model robustly detected the progressive changes associated with aging and predicted biological age accurately. The results also indicated that individuals with chronic diseases show deviations in AgeDiff that can be used to detect and assess disease progression.

Implications of the Study

Overall, the findings indicated that the Transformer-based multimodal AI tool that uses facial, tongue, and retinal fundus images can accurately detect and predict the progression of age-related chronic diseases.

Journal reference:

- Wang, J., Gao, Y., Wang, F., Zeng, S., Li, J., Miao, H., Wang, T., Zeng, J., Baptista-Hon, D., Monteiro, O., Guan, T., Cheng, L., Lu, Y., Luo, Z., Li, M., Zhu, J., Nie, S., Zhang, K., & Zhou, Y. (2024). Accurate estimation of biological age and its application in disease prediction using a multimodal image Transformer system. Proceedings of the National Academy of Sciences, 121(3), e2308812120. https://doi.org/10.1073/pnas.2308812120